Article summary

Recently, a hosting provider’s ‘upgrade’ dramatically changed the stolen CPU time on one of our systems. I investigated and found that our virtual machine’s CPU allocation had been deprioritized. The rest of the post describes “stolen CPU” and the behavior that we experienced on our virtual machine.

Anyone who runs operating systems (especially UNIX or GNU/Linux) in a virtualized environment has likely noticed the %steal and %st metrics in monitoring and reporting utilities. This stolen CPU metric refers to CPU time “stolen” from the given virtual machine by the hypervisor for other operations. The “stolen” descriptor is unfortunate as the CPU time really isn’t stolen in the sense of misappropriation. The metric really should be named something like “shared” or “reallocated”.
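On Linux, utilities such as `top` and `vmstat` derive this figure from the cumulative counters on the aggregate `cpu` line of `/proc/stat`, where steal is the eighth value. The following is a minimal sketch of that calculation, not the exact code any particular tool uses; the function names (`parse_cpu_line`, `steal_percent`, `sample_steal_percent`) are made up for illustration.

```python
import time


def parse_cpu_line(line):
    """Return (steal, total) jiffies from the aggregate 'cpu' line of /proc/stat."""
    fields = line.split()
    if not fields or fields[0] != "cpu":
        raise ValueError("expected the aggregate 'cpu' line")
    values = [int(v) for v in fields[1:]]
    # Field order: user nice system idle iowait irq softirq steal guest guest_nice
    steal = values[7] if len(values) > 7 else 0
    # guest and guest_nice are already counted in user and nice,
    # so only the first eight fields are summed for the total.
    total = sum(values[:8])
    return steal, total


def steal_percent(before, after):
    """Percentage of elapsed CPU time reported as steal between two samples."""
    steal_b, total_b = parse_cpu_line(before)
    steal_a, total_a = parse_cpu_line(after)
    elapsed = total_a - total_b
    if elapsed <= 0:
        return 0.0
    return 100.0 * (steal_a - steal_b) / elapsed


def sample_steal_percent(interval=1.0, path="/proc/stat"):
    """Read /proc/stat twice, `interval` seconds apart (Linux only)."""
    with open(path) as f:
        before = f.readline()
    time.sleep(interval)
    with open(path) as f:
        after = f.readline()
    return steal_percent(before, after)
```

Calling `sample_steal_percent()` inside a Linux guest should give a number comparable to the `%st` column in `top` for the same interval, since both are computed from counter deltas rather than instantaneous values.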

What is “Stolen” CPU?

The “stolen CPU” metric measures how much CPU time was reclaimed by the hypervisor because the virtual machine exceeded its allocated CPU time. In a virtualized environment, virtual machines (VMs) are allocated different amounts of time or priorities on resources such as CPU time and RAM. In some cases (RAM allocation), the allocation is very clear because the VM is given exclusive control; in other cases (CPU allocation), it is much hazier because the resource is shared among many VMs.

Calculating the actual stolen percentage based on current load is rather complex (see how it’s calculated on the IBM Power platform), but the important thing to realize is that the metric scales with CPU usage: the more CPU time that your VM uses, the larger the percentage of that time which is “stolen” or unavailable to it. The actual amount will depend on what the VM is doing and how it has been allocated CPU time or priority.

For a very simple example, let’s say that a VM has been allocated 40% of the time on a given CPU. When the VM is doing nothing (idle), none of its allocated CPU time is stolen (0%). However, if the VM is fully loaded, it will use its full CPU allocation: 40%. The VM is really using 100% of what is allocated to it, but only 40% of what the CPU has available. At this point, 60% of the CPU time is stolen from the VM. If the hypervisor did not limit how much time the VM was allowed on the CPU, the VM would have used the extra time.
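The arithmetic in this example can be written out as a tiny function. This is a sketch of the simplified model used in the paragraph above only (a hard cap on a single CPU); real hypervisor schedulers are more complicated, and the name `split_cpu_time` and its parameters are hypothetical.

```python
def split_cpu_time(allocation_pct, demand_pct):
    """Simplified model: the VM wants `demand_pct` of a CPU but is capped at
    `allocation_pct`. Returns (used_pct, stolen_pct) as percentages of the CPU."""
    used = min(demand_pct, allocation_pct)
    stolen = demand_pct - used
    return used, stolen


# Idle VM: nothing is demanded, so nothing is stolen.
idle = split_cpu_time(allocation_pct=40, demand_pct=0)

# Fully loaded VM: it gets its 40% and the remaining 60% is reported as stolen.
loaded = split_cpu_time(allocation_pct=40, demand_pct=100)
```

In this model, steal only appears once demand exceeds the allocation, which is why a lightly loaded VM normally reports a steal value close to zero.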

Sudden Change

Recently, a few of our hosted VMs saw a sudden increase in the %steal reported by our monitoring software (Zabbix). The initial, obvious explanation was that our VMs were suddenly more heavily loaded: the more CPU time that VMs use, the more time that is stolen from them. One of our VMs (the most obvious example) had gone from averaging 0.5% steal to 8% steal. These changes were not caused by a transient processing event (e.g. installing updates or running a task), since the change was sustained and consistent.

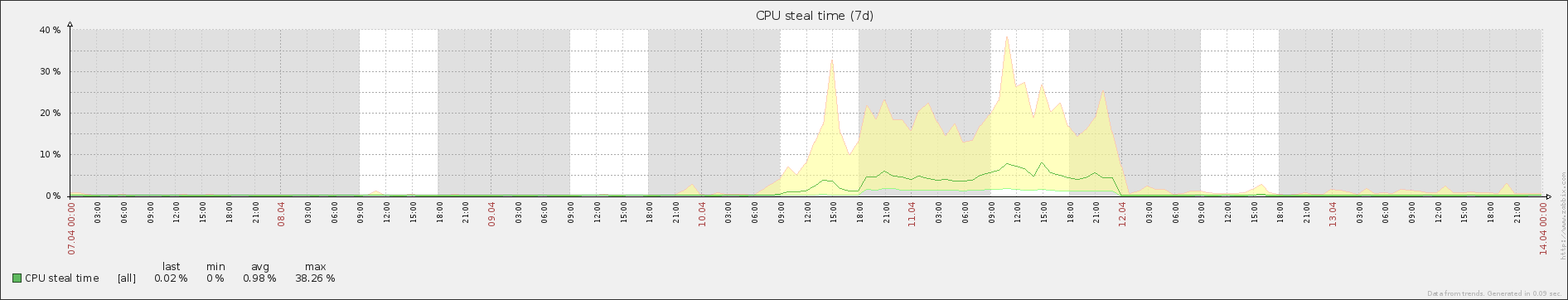

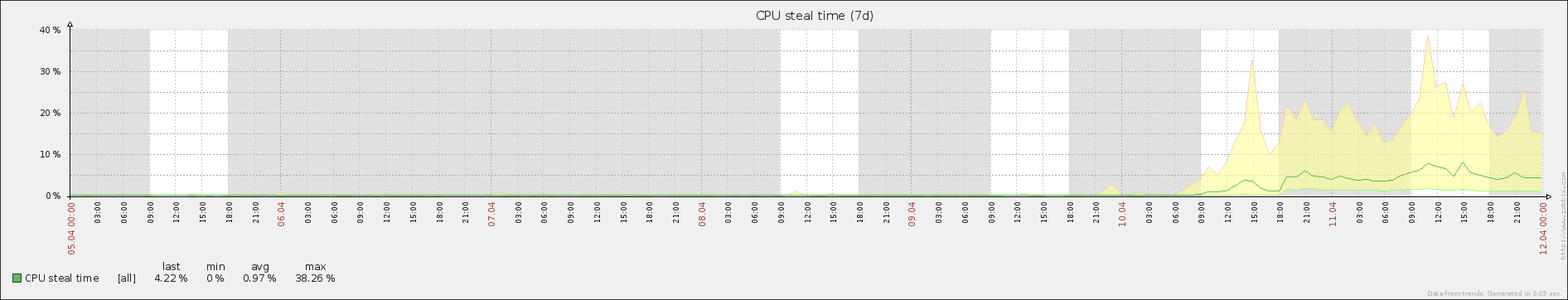

I was perplexed. The VM in question was not public facing and was used only for a relatively low-traffic internal application. The traffic (and hence load) on this VM was always very consistent and did not vary very much over the course of the day. Imagine my surprise when I suddenly saw the following graph in Zabbix:

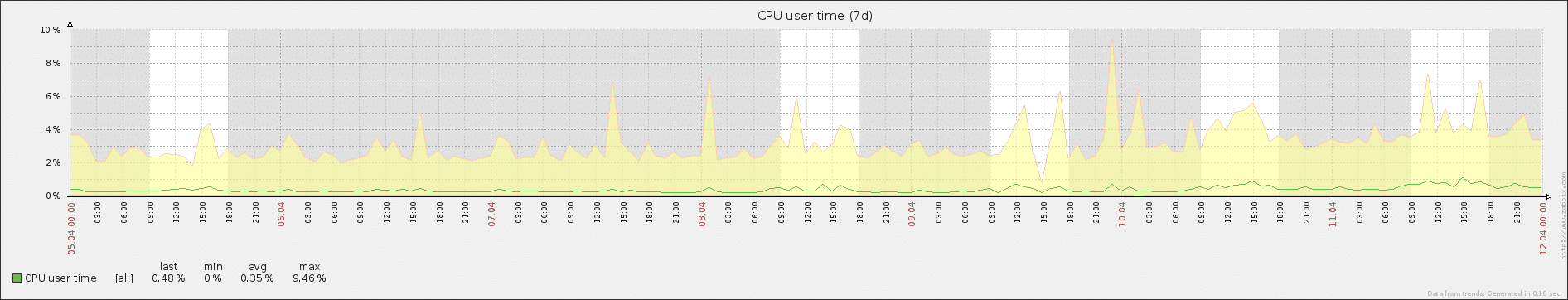

And, for comparison, here is the CPU user time graph over the same period:

If the CPU user time had spiked and stayed consistently higher over this time period, there wouldn’t have been a mystery. That would have been expected.

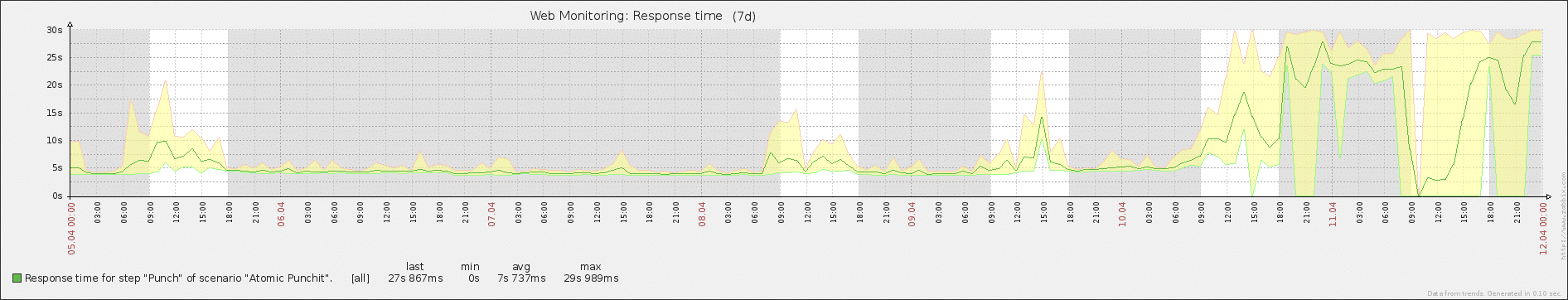

The only reason I even looked at the stolen CPU graph in Zabbix was that the VM had suddenly started to perform sluggishly. While the user CPU usage was consistent with historical trends, our application was responding measurably slower.

The Root Cause

In the process of trying to figure out what had changed to cause these odd measurements, I logged into our hosting provider’s interface. I was reminded that our hosting provider was providing a ‘free’ upgrade for all plans — greater resource allocations at the same cost (but you had to “migrate” to take advantage of this). I had received an e-mail to this effect earlier in the week but hadn’t gotten around to exploring further. Now, taking the time to read, I found the reason for our stolen CPU increase.

Instead of just “bumping” all of the plans with more resources, the hosting provider had restructured their offering.

Previously, the plans were structured as follows. Our VMs were on the 1024 plan.

| Plan | RAM | CPU Priority |
|---|---|---|
| 512 | 512 MB | 1x CPU Priority |
| 1024 | 1024 MB (1 GB) | 2x CPU Priority |
| 2048 | 2048 MB (2 GB) | 4x CPU Priority |
| … | … | … |

Now, they started with the 1024 plan… and went up from there:

| Plan | RAM | CPU Priority |
|---|---|---|
| 1024 | 1024 MB (1 GB) | 1x CPU Priority |
| 2048 | 2048 MB (2 GB) | 2x CPU Priority |
| 4096 | 4096 MB (4 GB) | 4x CPU Priority |
| … | … | … |

Note, however, that the 1024 plan suddenly went from having “2x” CPU priority to having “1x” CPU priority. The 2048 plan went from having “4x” CPU priority to having “2x” CPU priority.

While the hosting provider was offering us a “free upgrade” from the 1024 plan to the 2048 plan, we had to go through a migration process. It wouldn’t be an instantaneous upgrade. Since we hadn’t migrated yet, our VM had essentially had its CPU priority halved. Looking back, I saw that the hosting provider had announced the plan change only a few days before: 9 April 2013. Checking my CPU steal time graphs in Zabbix, it was clear that the increase had started the day after the announcement. This was all the information that I needed.

I scheduled the upgrade migration and waited to see how it would change things. As I suspected, after the upgrade, our stolen CPU time averages were back to what they had been before, averaging around 0.5% steal. The response times of our web application similarly returned to normal, and the system no longer appeared to perform as sluggishly as it had for the past few days.

Zabbix showed the return of the %steal to normal levels:

Conclusion

Measuring stolen CPU may not be all that useful for most systems, as it usually only indicates the amount of load relative to allocated CPU resources (which usually don’t change). However, when something about the underlying CPU allocation changes, the stolen CPU metric can provide interesting insights into why systems are not performing as well as they once did. Namely, it can indicate that the expected CPU allocation has changed. When you host your own virtualization infrastructure, no allocation change should really be unexpected. However, if you host with a third party (AWS EC2, Linode, Rackspace, etc.), measuring the stolen CPU provides a certain level of assurance that the hosting provider has not suddenly de-prioritized your CPU allocation or made a major allocation shift.
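One way to act on this is to alert when %steal stays elevated rather than merely spiking. The sketch below is an illustration only, not a Zabbix feature or configuration: the function name `sustained_steal_shift` and the default thresholds are assumptions chosen for the example, and suitable values will depend on your own baseline.

```python
from statistics import mean


def sustained_steal_shift(samples, baseline_len, recent_len, factor=4.0, floor=1.0):
    """Return True if every one of the last `recent_len` %steal samples is at
    least `floor` and at least `factor` times the mean of the first
    `baseline_len` samples. Thresholds are illustrative assumptions."""
    if len(samples) < baseline_len + recent_len:
        raise ValueError("not enough samples for both windows")
    baseline = mean(samples[:baseline_len])
    threshold = max(floor, factor * baseline)
    recent = samples[-recent_len:]
    # Requiring all recent samples to exceed the threshold filters out
    # one-off spikes such as an update run.
    return all(s >= threshold for s in recent)


# Steal averaging 0.5% that jumps to 8% and stays there.
shifted = sustained_steal_shift([0.5] * 10 + [8.0] * 5, baseline_len=10, recent_len=5)

# A single spike inside an otherwise normal window.
spike = sustained_steal_shift([0.5] * 10 + [0.5, 0.5, 8.0, 0.5, 0.5],
                              baseline_len=10, recent_len=5)
```

With the figures from this post (0.5% rising to a sustained 8%), the first call flags a shift and the second, a lone spike, does not.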