“We’ve arranged a global civilization in which most crucial elements – transportation, communications, and all other industries […] profoundly depend on science and technology. We have also arranged things so that almost no one understands science and technology. This is a prescription for disaster. We might get away with it for a while, but sooner or later this combustible mixture of ignorance and power is going to blow up in our faces.” – Carl Sagan (1995), American astronomer, cosmologist, astrophysicist, astrobiologist, author, and science communicator

So what do you think? Do you… understand it? Or do we all catch ourselves at times taking the shortcut, believing hearsay, trusting that we’ll cross-reference information later? Innovation is a beautiful thing – it’s the crux of our society. But it’s also important to remember in such exciting times, with the world at our fingertips, that there are always repercussions.

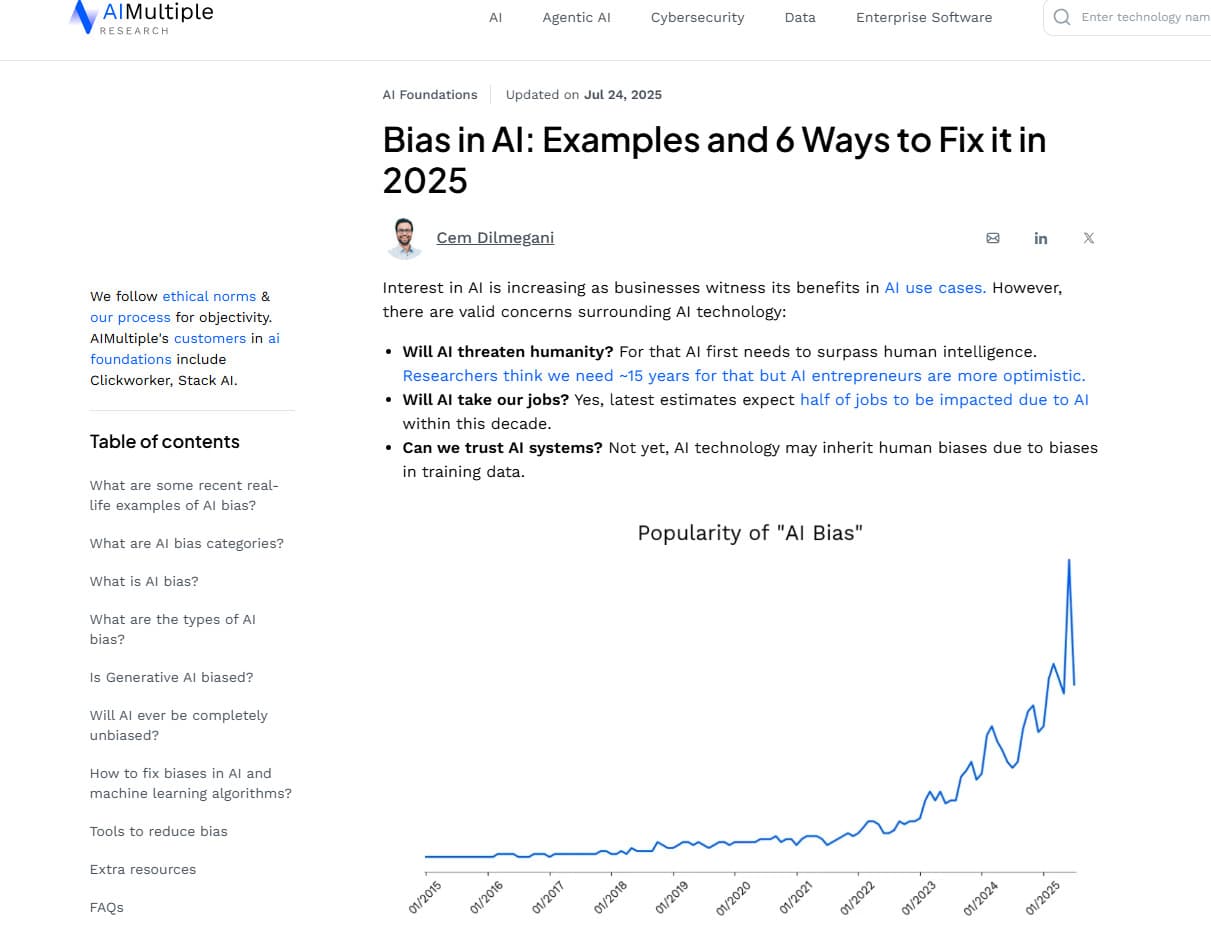

The rise of AI is one of these pivotal moments in society. It’s exciting, vast, full of potential… but only if we engage with it clearly and carefully. And, as developers, students, innovators, and leaders, I think it’s crucial to check our own perspectives as well as our neighbors’. There are loads of amazing and exciting topics we could dive into on Generative AI (GenAI) alone, but that’s not what today is for. Today is to take off those rose-tinted glasses, slow down our wonderful future dreaming for just a moment, and truly understand what we’re working with. And while there is so much to understand, let’s just focus on one aspect of it today – bias.

Medicine isn’t safe from bias — and neither is AI.

Let’s start with healthcare, one of the most high-stakes domains AI now touches.

Generative AI is already being explored for decision support, diagnosis assistance, and patient care recommendations. But studies show that large language models – like GPT-4 – can unintentionally embed and even magnify racism, ableism, and sexism buried in their training data. These aren’t just “quirks” — they’re potentially life-threatening errors.

“GPT-4 did not appropriately model the demographic diversity of medical conditions… It consistently produced vignettes that stereotype demographic presentations… showing significant associations between demographics and differential treatment plans.” [1]

Remember: before 1993, women were barely included in clinical trials. And women of color? Even less so. Even now, research largely defaults to male subjects. That means many GenAI systems are replicating a medical lens that’s already excluding, misdiagnosing, or undertreating entire groups of people.

“The gender gap in medical research […] results in real-life disadvantages for female patients.” [2]

If GenAI continues to learn from that kind of incomplete and biased data, it doesn’t just reflect our past mistakes. It codifies them into our future.

Ableism: When AI can’t hear everyone.

I’ll admit, I was surprised when I first learned this: AI speech systems often struggle with non-standard accents, speech impediments, or vocal patterns associated with neurodivergence. And that surprise? That’s a sign of my privilege. Because this isn’t something I’ve personally had to navigate, it wasn’t even on my radar, even though I participate in a lot of AI-documented meetings (not just transcripts, but synthesized action items, etc.). But that’s exactly the issue. Just because a problem isn’t loud in our own lives doesn’t mean it’s not real – and harmful. This isn’t a technical bug. It’s biased design – one where differently abled people are left out of the dataset and, as a result, the output.

Text-to-image models tell a similar story. Unless explicitly prompted, they rarely generate people with visible disabilities. And if you don’t frame the phrasing just right? You risk getting offensive or distorted caricatures instead of authentic human representations.

If an AI can’t “hear” you or “see” you correctly, should it be making decisions for you? Bigotry and bias are already broadcast in ways many people absorb subconsciously, without ever registering that it is happening. GenAI isn’t exempt from this. In many ways, it’s another amplifier.
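For the speech side of this, the gap can be made measurable. Here is a minimal sketch, assuming you have reference transcripts and the system’s output for speakers from different groups: compute word error rate (WER) per utterance and average it by group. The group labels and sample sentences are hypothetical, invented for illustration, and averaging per-utterance WER is a simplification of how benchmarks usually pool errors.

```python
from collections import defaultdict


def word_error_rate(reference: str, hypothesis: str) -> float:
    """Word-level edit distance divided by the number of reference words."""
    ref, hyp = reference.lower().split(), hypothesis.lower().split()
    if not ref:
        raise ValueError("reference transcript must not be empty")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            curr.append(min(prev[j] + 1,          # word missing from hypothesis
                            curr[j - 1] + 1,      # extra word in hypothesis
                            prev[j - 1] + cost))  # substitution or match
        prev = curr
    return prev[-1] / len(ref)


def wer_by_group(samples):
    """Average per-utterance WER for each speaker group.

    `samples` is an iterable of (group, reference, hypothesis) tuples.
    """
    rates = defaultdict(list)
    for group, reference, hypothesis in samples:
        rates[group].append(word_error_rate(reference, hypothesis))
    return {group: sum(r) / len(r) for group, r in rates.items()}


# Hypothetical toy data, invented purely for illustration.
samples = [
    ("group_a", "send the report by friday", "send the report by friday"),
    ("group_b", "send the report by friday", "send the resort my friday"),
]
print(wer_by_group(samples))
```

A consistent gap between groups on comparable audio is a signal worth raising with whoever owns the transcription tool, especially if its output feeds into meeting summaries or action items.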

The Social Side: Learn what AI sees in us.

It doesn’t stop at ableism. A recent study on AI-generated images exposed some uncomfortable realities – and, as a woman, it’s sad to say they were not shocking. When asked to imagine doctors, lawyers, or leaders, the tested models (Midjourney, Stable Diffusion, and DALL·E 2) mostly delivered white men. Women were generated as younger, prettier, and smiling more. Black individuals were rarely shown in leadership roles and often presented with less professional clothing or demeanor, if shown at all.

“All three AI generators exhibited bias against women and African Americans […] even more pronounced than the real-world labor force statistics or Google image search.” [3]

This doesn’t just reinforce stereotypes; it amplifies them. Worse, these “nuanced” biases fly under the radar. They seep into our subconscious – subtly equating youth with femininity, anger with masculinity, and race with risk or trust – all without saying a word. Now, remember that this tool is rapidly being adopted not only into our social lives but also into our educational habits.

In hiring? These models have invisibly sorted resumes based on word choices that correlate with demographics. In education? They’ve recommended tutoring tracks based on patterns that mirror systemic inequalities – using proxies like zip code or language style that correlate with race and class stereotypes more than a person’s true ability. Zoom out far enough, and AI is helping to redraw social hierarchies we’ve been trying to dismantle.
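If you want to check whether a screening model is doing the kind of sorting described above, one common starting point is to compare selection rates across groups. The sketch below is a minimal illustration, not a full fairness audit: the group labels, the toy `decisions` data, and the function names are all hypothetical, and the 0.8 cutoff borrows the “four-fifths” rule of thumb from US employment guidelines as an assumption about what deserves a closer look.

```python
from collections import Counter


def selection_rates(decisions):
    """Share of positive outcomes per group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True if the model advanced that candidate.
    """
    seen, chosen = Counter(), Counter()
    for group, selected in decisions:
        seen[group] += 1
        if selected:
            chosen[group] += 1
    return {group: chosen[group] / seen[group] for group in seen}


def impact_ratios(rates):
    """Each group's selection rate relative to the highest-rate group."""
    best = max(rates.values())
    if best == 0:
        raise ValueError("no group has any positive outcomes")
    return {group: rate / best for group, rate in rates.items()}


# Hypothetical screening results, invented purely for illustration.
decisions = (
    [("group_a", True)] * 6 + [("group_a", False)] * 4
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

rates = selection_rates(decisions)
for group, ratio in impact_ratios(rates).items():
    # 0.8 is the assumed rule-of-thumb threshold, not a legal verdict.
    flag = "review" if ratio < 0.8 else "ok"
    print(f"{group}: rate={rates[group]:.2f} ratio={ratio:.2f} -> {flag}")
```

A low ratio does not prove discrimination on its own, and a passing one does not rule it out. Treat it as a prompt to dig into which features (zip code, phrasing, language style) are driving the gap.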

So?… What can I do?

If you’re developing, testing, or enhancing these models directly, the answer to “So what?” is much heftier – and not something I’m going to try to fit into one blog post. Just remember that the direct responsibility of creating, influencing, and enabling GenAI’s autonomy has ramifications both expected and unknown, and should be taken seriously as such.

If you’re a student, or simply put, a learner like many of us, be critical. Be cautious. It is an amazing time to be alive, to have so much “knowledge” at our fingertips. But even superheroes have to be reminded: with great power — especially the kind encoded in lines of unseen, rapidly evolving AI — comes great responsibility. If you are going to leverage the amazing tools of this time, like generative AI, you need to own it. Understand it. If you blindly trust everything you’re told, you’re setting yourself up for failure both in the short term (cheating on homework) and long term (cheating yourself out of knowledge and confidence that you’re capable of doing and explaining such things).

In The Tech Industry

But what about the everyday developer staying up on the latest innovations? I’d say it’s safe to guess you engage with generative AI, whether through questions and discussions in ChatGPT or an agent suggesting and assisting the more automated portion of your programming in IDEs like Cursor. Our critical thinking, our filtering, and the continued development of our prompt engineering skills are pivotal to this experience. An article from KU said it well – “As with any other information, we need to evaluate AI-created material with a critical eye.” [5]

And MOST importantly, if there’s one thing you take away from this post, let it be this message: If mankind continues to innovate but fails to understand the shortcomings, consequences, and unforeseen influence of such creations, we are setting our future up for failure – which is the opposite of what innovators’ dreams are truly meant for.

“We shape our tools and thereafter our tools shape us.” — Father John Culkin

The stakes aren’t theoretical. How AI sees us will influence how we see each other — in medicine, education, politics, and everything in between. If we let bias entrench itself now, its side effects will last far longer than today’s tech hype cycle. Bias in GenAI isn’t just a technological problem. It’s a human one. And if we refuse to put both our influence and blind spots under the magnifying glass? All this innovation may hurt more people than it helps.

Let’s create boldly. But let’s also create wisely.