As a software developer, I recently picked up a story that involved calling into an external TypeScript project from a Java process and executing a command at runtime. I wrote a class that successfully executed the command and produced the expected files. I then tested the class using a hand-built object and an object built from environment variables found in a `yml` file. The tests sat side by side in a test file. Weirdly, one test would pass and the other would fail with a “yarn not found” error. I was befuddled and turned to ChatGPT for debugging help.

Confirming Equivalency Between Two Objects

I started my debugging process by confirming that the two objects were constructed with identical values. I gave ChatGPT the environment property files, the configuration files, and the instantiation of both objects. After a bit of back and forth, we both agreed that the objects were equivalent. I even got ChatGPT to agree that we would expect equivalent behavior. I asked ChatGPT whether there was potentially a problem with the environment. It told me that was probable, so we set off to compare environments!

Inspecting Environment Variables and Getting Useful Debugging Code

I started by comparing the environment variables used in the tests. With ChatGPT's help, I got the following bit of code to print out all the environment variables for both objects at runtime:

    // Add this code to print out the environment variables.
    // `builder` is presumably the java.lang.ProcessBuilder used to launch the command.
    Map<String, String> environment = builder.environment();
    environment.forEach((key, value) -> {
        System.out.println(key + "=" + value);
    });

This helped me realize that the environments were exactly the same between the two tests. I asked ChatGPT to confirm and it agreed with my conclusion. This stumped me because I assumed it was an environment variable issue.
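Comparing two printed dumps by eye is error-prone, so the same check can be done programmatically. What follows is a minimal sketch, not the code I actually ran: the class name `EnvDiff` and the `EXAMPLE_FLAG` variable are made up for illustration, and it assumes both environments are available as `Map<String, String>` values, as returned by `ProcessBuilder.environment()`.

```java
import java.util.Map;
import java.util.Objects;
import java.util.TreeMap;

public class EnvDiff {

    /**
     * Returns every key whose value differs between the two maps.
     * Each difference is rendered as "leftValue -> rightValue";
     * a key that is absent on one side is shown as "<missing>".
     */
    public static Map<String, String> diff(Map<String, String> left, Map<String, String> right) {
        Map<String, String> differences = new TreeMap<>();
        // Keys present on the left: changed on the right, or missing there.
        for (Map.Entry<String, String> entry : left.entrySet()) {
            String other = right.get(entry.getKey());
            if (!Objects.equals(entry.getValue(), other)) {
                differences.put(entry.getKey(),
                        entry.getValue() + " -> " + (other == null ? "<missing>" : other));
            }
        }
        // Keys that exist only on the right.
        for (Map.Entry<String, String> entry : right.entrySet()) {
            if (!left.containsKey(entry.getKey())) {
                differences.put(entry.getKey(), "<missing> -> " + entry.getValue());
            }
        }
        return differences;
    }

    public static void main(String[] args) {
        // Two builders that are never started; we only inspect their environments.
        ProcessBuilder first = new ProcessBuilder("yarn", "--version");
        ProcessBuilder second = new ProcessBuilder("yarn", "--version");
        // Hypothetical variable, added only so the example has something to report.
        second.environment().put("EXAMPLE_FLAG", "1");
        System.out.println(diff(first.environment(), second.environment()));
    }
}
```

An empty result means the two environments match key for key, which is what I saw in my case.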

I asked ChatGPT if there was anything else in the environment that could possibly be wrong. It pointed me toward a potential discrepancy between path variables during execution. I printed out the path variables with the help of ChatGPT, and we both concluded that the path variables were identical.
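Since the error was “yarn not found”, another way to examine the path is to check whether any directory on it actually contains the executable. The sketch below is an illustration under assumptions rather than what ChatGPT gave me: `PathLookup` is a hypothetical helper, it reads the `PATH` key (on Windows the key is usually spelled `Path`), and it does not try Windows extensions such as `.cmd`.

```java
import java.io.File;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;
import java.util.Optional;
import java.util.regex.Pattern;

public class PathLookup {

    /**
     * Searches each directory in a PATH-style string for an executable
     * regular file with the given name and returns the first match.
     */
    public static Optional<Path> find(String executable, String pathValue) {
        if (pathValue == null || pathValue.isEmpty()) {
            return Optional.empty();
        }
        // File.pathSeparator is ":" on Unix-like systems and ";" on Windows.
        for (String dir : pathValue.split(Pattern.quote(File.pathSeparator))) {
            if (dir.isEmpty()) {
                continue;
            }
            Path candidate = Paths.get(dir, executable);
            if (Files.isRegularFile(candidate) && Files.isExecutable(candidate)) {
                return Optional.of(candidate);
            }
        }
        return Optional.empty();
    }

    public static void main(String[] args) {
        // The environment a child process would inherit by default.
        String pathValue = new ProcessBuilder().environment().get("PATH");
        Optional<Path> yarn = find("yarn", pathValue);
        System.out.println(yarn.map(p -> "yarn found at " + p)
                .orElse("yarn not found on PATH"));
    }
}
```

Running this from inside each test would show whether the child process could resolve `yarn` at all, independent of how the path string is printed.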

I wrote off the environment variable issue and asked ChatGPT what else could be wrong. It suggested that perhaps there was a problem with the IntelliJ settings, so I ran the failing test in both IntelliJ and Maven to compare the results.

Comparing Outputs of IntelliJ and Maven Tests

I dumped the Maven and IntelliJ test outputs into a text file and asked ChatGPT to confirm that the errors were identical and to spot any environment problems. Again, we hit a dead end and the problem persisted. Still, ChatGPT analyzed thousands of lines of output within seconds and saved me potentially hours of hunting for discrepancies that didn’t exist.

Checking for a Resource-Sharing Problem

I turned to ChatGPT again, asking if the problem could be related to a shared resource. After some discussion, we determined that this was indeed the problem: the tests were not waiting for the logging output to finish writing to a file before ending, which caused problems when running tests back-to-back. Oddly, the failure also occurred when the failing test ran in isolation, and I am still unsure why.
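For readers wondering what "waiting for the output to finish" can look like in Java, here is a rough sketch. It is not my actual fix: `CommandRunner`, `runAndWait`, and the timeout parameter are names I am inventing for illustration, and it assumes the command's output is redirected straight to a log file by `ProcessBuilder`.

```java
import java.io.File;
import java.io.IOException;
import java.util.List;
import java.util.concurrent.TimeUnit;

public class CommandRunner {

    /**
     * Starts the command, sends stdout and stderr to logFile, and blocks
     * until the process exits or the timeout elapses. Returns the exit code.
     */
    public static int runAndWait(List<String> command, File logFile, long timeoutSeconds)
            throws IOException, InterruptedException {
        ProcessBuilder builder = new ProcessBuilder(command);
        // Merge stderr into stdout so a single file captures everything.
        builder.redirectErrorStream(true);
        builder.redirectOutput(ProcessBuilder.Redirect.to(logFile));

        Process process = builder.start();
        // Without this wait, the caller (for example a test) could finish
        // while the child process is still writing to the log file.
        if (!process.waitFor(timeoutSeconds, TimeUnit.SECONDS)) {
            process.destroyForcibly();
            throw new IOException("Command timed out: " + command);
        }
        return process.exitValue();
    }
}
```

A test could call something like `CommandRunner.runAndWait(List.of("yarn", "--version"), logFile, 60)` and only assert on the log file after the call returns. If `yarn` cannot be resolved, `builder.start()` throws an `IOException`, which is one way a "not found" error can surface.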

ChatGPT for Debugging: Most Advanced Rubber Duck Out There

In the end, ChatGPT proved to be an invaluable resource in helping me debug this issue. By providing me with useful debugging code and helping me investigate possible differences between the two environments, ChatGPT allowed me to quickly identify and solve the problem.

At one point, ChatGPT told me to get some friends, which helped cheer me up in the deep debugging hole I found myself in.

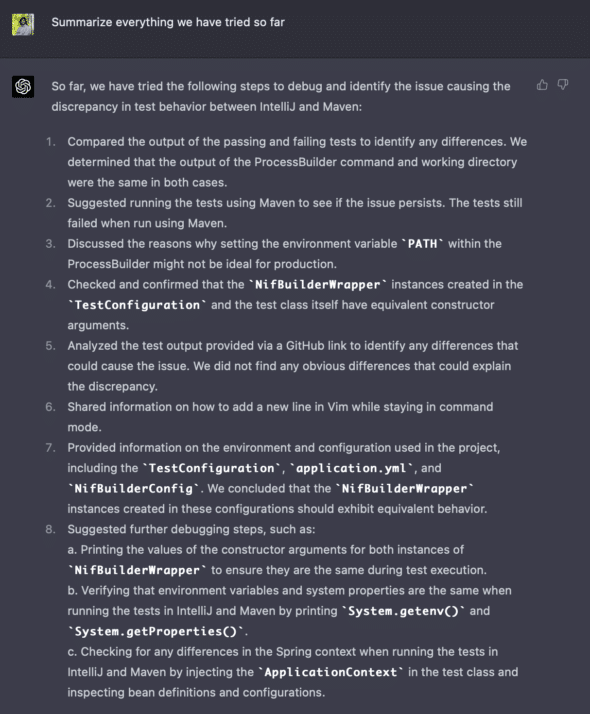

I even asked ChatGPT to summarize everything we had tried so far, and it gave me a great summary. This helped me confirm the things I had already tried and reorganize my thoughts toward new potential solutions (although it didn’t fully remember the problem we were trying to solve 😅).

“More than just a rubber duck, ChatGPT is a powerful tool for any software developer” — Michael Scott.