When you’re playing Planning Poker with a small software team, it’s pretty easy to reach a consensus. Everyone can be heard, and the team can agree on an acceptable point value for each story.

But the larger the team, the harder it is to get everyone on the same page. People keep their questions or comments to themselves for fear of wasting everyone’s time. Not everyone gets a chance to speak. And there’s usually a wider range of votes, so it’s hard to settle on a single number.

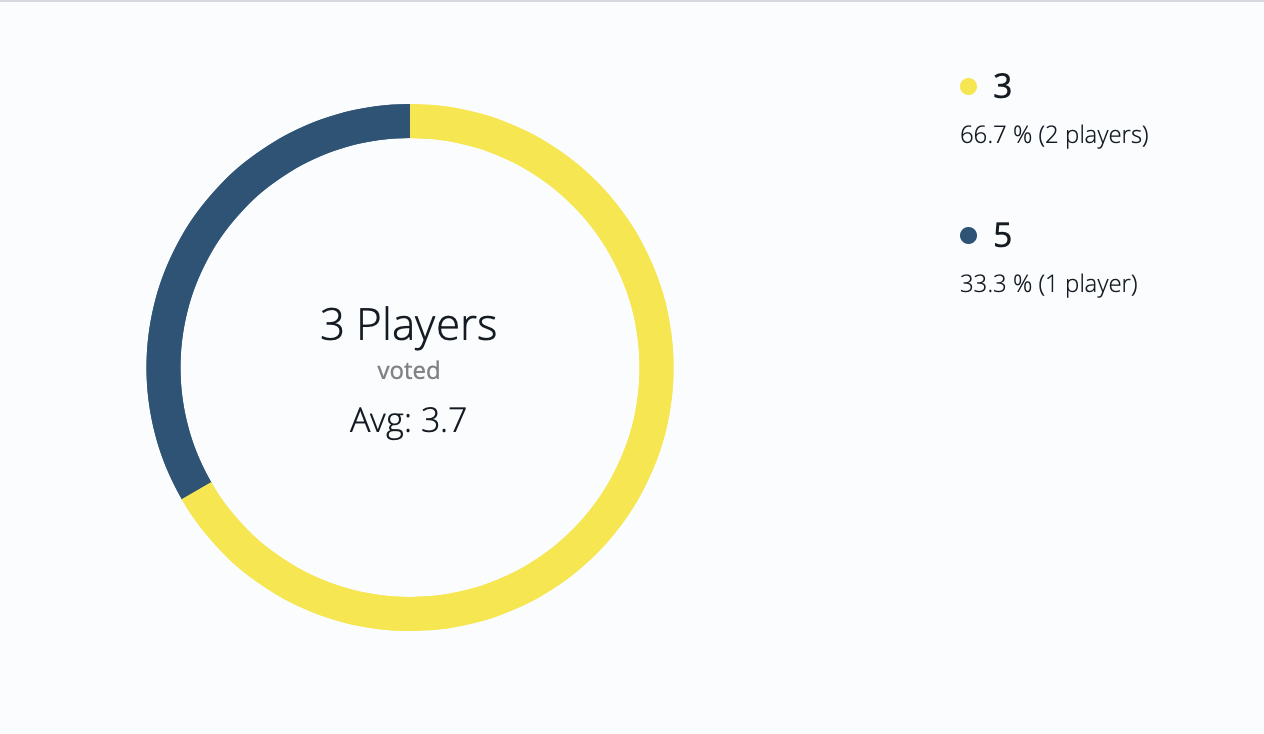

With no consensus, it’s tempting to tally up everyone’s votes and then calculate the average. PlanITPoker even automatically displays the average number of points for the story you’re discussing.

Averaging points seems to be a common practice. But I don’t think it’s the way to go.

Points Are Meaningless Without Context

Points are based on a story’s relative effort, risk, and complexity. Because every project is different, “2 points of effort” doesn’t mean anything on its own, and what it represents will likely change from one project to the next.

Even on a given project, different people will understand “2 points” in different ways. Some people always overestimate, while others underestimate. New teammates won’t understand the point scale for the team right away.

And estimation always involves a lot of guesswork, especially at the beginning of a project. You can never account for the unknown unknowns. And you’re often trying to estimate large things, which is quite difficult. For example, it’s probably easier for most people to guess a cat’s weight than an elephant’s. The same is true for story work; “User can only enter valid email addresses during user registration” is much easier to estimate than “User can register for an account” because it’s smaller and more recognizable.

In summary, if you ask a dozen people to estimate a story, you’re going to get a dozen guesses, based on a dozen slightly different understandings of “2 points.”

The Problem with Averages

I think this is why averaging everyone’s estimates seems to result in a lot of similarly pointed stories. On one team I worked with, about 75% of stories ended up being around a 10. The team would estimate, people usually chose 5, 8, or 13, and it would almost always average out around 10.

When almost everything is a 10, how do you know what “10 points worth of effort” means? With a relative scale, a 13-point story should take roughly two and a half times as much effort as a 5-point story. But is a 9.78-point story actually any more difficult than an 8.56-point story, or was it just the way the average worked out?
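A quick Python sketch makes the flattening concrete. It assumes a Fibonacci-style deck (1, 2, 3, 5, 8, 13, 20) and five hypothetical voters who each pick 5, 8, or 13; the vote sets are made up for illustration and are not data from the team described above.

```python
# Assumed Fibonacci-style card scale; your team's deck may differ.
SCALE = [1, 2, 3, 5, 8, 13, 20]

def mean(votes):
    """Plain arithmetic mean of a list of votes."""
    return sum(votes) / len(votes)

# Hypothetical vote sets: five voters, each choosing 5, 8, or 13.
vote_sets = [
    [5, 5, 8, 13, 13],
    [5, 8, 8, 13, 13],
    [8, 8, 8, 13, 13],
    [5, 8, 13, 13, 13],
]

for votes in vote_sets:
    avg = mean(votes)
    # Show the rounded average and whether it is an actual card on the scale.
    print(votes, "->", round(avg, 2), "| on scale:", avg in SCALE)
```

The four quite different mixes average to 8.8, 9.4, 10.0, and 10.4: all within about a point and a half of each other, and none of them a card anyone could have played.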

(This is especially frustrating when the team uses these averages in the backlog. It might be personal preference, but I don’t want to see a backlog with a bunch of random numbers. I’d prefer to keep the stories consistent with the scale we’re using.)

I think this flattening is largely due to outliers. Say the votes come in as 3, 3, 3, 5, and 20, which averages to 6.8. The majority of the team agreed that the story seemed like a 3, but the final estimate is more than twice that. The people who consistently overestimate or underestimate end up affecting the majority of stories in the backlog.
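Running the numbers from that example in Python shows how much a single outlier pulls the average: the lone vote of 20 nearly doubles the average of the other four votes.

```python
# The example votes from the paragraph above.
votes = [3, 3, 3, 5, 20]

def mean(values):
    """Plain arithmetic mean."""
    return sum(values) / len(values)

with_outlier = mean(votes)          # 34 / 5
without_outlier = mean(votes[:-1])  # drop the single 20: 14 / 4

print(with_outlier, without_outlier)  # 6.8 3.5
```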

What’s the Alternative?

So if averaging story points doesn’t work, then what does? Two things come to mind.

Take the Median

The median is more representative of what people are thinking because, unlike the average, it isn’t dragged toward the outliers. And with an odd number of votes, the median is always one of the cards someone actually played, so you stay within your story point scale. With the example above, the median gives a 3-point story instead of a 6.8-point one, which matches what most of the team voted.
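Python’s standard `statistics` module can do this directly. One wrinkle: with an even number of votes, `statistics.median` averages the two middle cards and can land off the scale. This sketch uses `statistics.median_high` to always pick a real card; taking the higher middle value is an assumption of the sketch, and a team could just as reasonably take the lower one (`median_low`) or discuss the tie.

```python
import statistics

def median_estimate(votes):
    """Median vote that is always one of the cards actually played.

    With an odd number of votes this is simply the middle card. With an even
    number, it takes the higher of the two middle cards instead of averaging
    them (an assumed tie-breaking rule, not part of the article).
    """
    return statistics.median_high(votes)

print(median_estimate([3, 3, 3, 5, 20]))  # 3
print(statistics.median([3, 5, 8, 13]))   # 6.5, not a card on the scale
print(median_estimate([3, 5, 8, 13]))     # 8
```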

Work Toward a Real Consensus

This might be more difficult on a larger team, but it’s definitely worth it. When teammates vote very low or very high, they’re probably missing some context (or they know something the rest of the team doesn’t). Rather than quickly taking the average and moving on to the next story, it’s worth your time to make sure everyone is on the same page.
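If you want a simple rule for who to hear from first, here is one hypothetical way to flag the very low and very high votes: anyone whose card sits more than one step away from the median card on the scale. The Fibonacci-style scale, the one-step threshold, and the function name `voters_to_hear_from` are all assumptions for illustration.

```python
import statistics

# Assumed Fibonacci-style scale; every vote must be a card from this list.
SCALE = [1, 2, 3, 5, 8, 13, 20]

def voters_to_hear_from(votes, max_steps=1):
    """Return voters whose card is more than `max_steps` cards from the median.

    `votes` maps each voter's name to the card they played. The median is
    taken with median_high so it is always a real card on the scale.
    """
    median_card = statistics.median_high(votes.values())
    median_index = SCALE.index(median_card)
    return sorted(
        name
        for name, card in votes.items()
        if abs(SCALE.index(card) - median_index) > max_steps
    )

# Hypothetical round using the vote values from the earlier example.
votes = {"Ana": 3, "Ben": 3, "Cai": 3, "Dee": 5, "Eli": 20}
print(voters_to_hear_from(votes))  # ['Eli']
```

The point isn’t the code itself but the habit: the flagged voters explain their reasoning, the team talks it through, and then everyone votes again.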

Have you had success with using averages in your story points? Is there another method you use for coming to a consensus on story point values? Let me know in the comments!