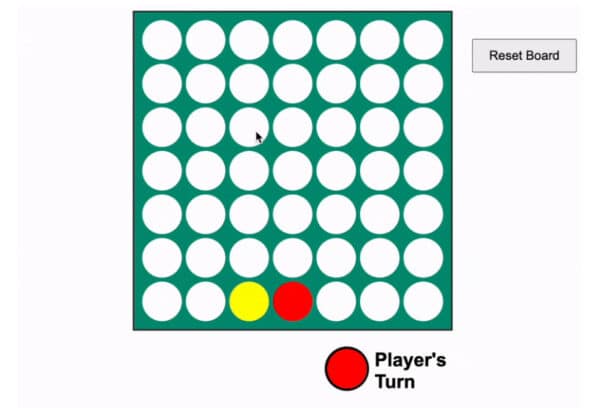

Over the past year, I’ve been exploring what it means to build a game with AI—sometimes restricting myself to unusual constraints, and other times leaning on new tools to see how far I could push the technology. In my first installment, Building a Game with AI: A 30-Minute Experiment, I explored whether I could create a playable Four-in-a-Row game with only copy/paste and an AI chatbot. In the follow-up, AI Refactoring AI: Codex Improves ChatGPT Generated Code, I revisited the project using OpenAI’s Codex, which helped add automated tests, fix winner-detection bugs, and even experiment with 45-degree rotations.

Now, for this final chapter, I decided to bring in a new wave of AI assistants—Cursor with GPT-5-High and code-supernova-1-million—to see if the project could finally realize my original vision: smooth gameplay, robust logic, and the elusive 45-degree “Plinko-style” rotations.

The Goals This Time

With the fundamentals already in place, I wanted to focus on refinement rather than just feasibility. My goals were clear:

- Solidify 45-Degree Rotations

Codex had made progress, but the logic wasn’t airtight. I wanted reliable, consistent Plinko-style drops where every piece lands exactly as you’d expect in the diamond orientation. - Fix Winner Detection Once and For All

Previous iterations sometimes determined a winner too early—while pieces were still in motion—or before the board’s UI fully updated. The goal was accuracy: only declare a winner when the board state truly reflected where pieces had landed. - Improve the User Experience

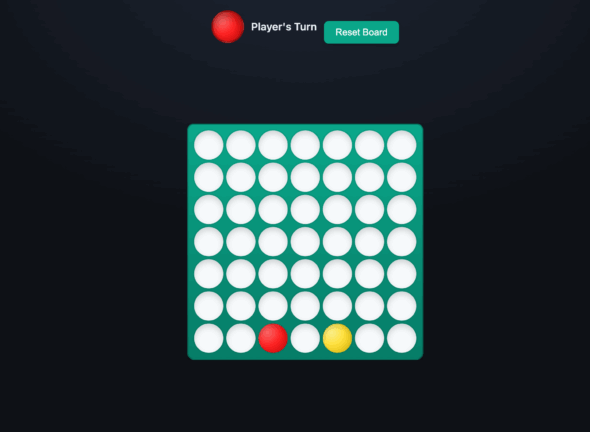

User interaction needed to pause gracefully during animations, so clicks wouldn’t interrupt or break the game mid-transition. And beyond function, I wanted some polish: a UI that felt more inviting than the flat prototype versions from before.

Achievements with GPT-5-High and Supernova

The new models succeeded in some cases, but also left a lot to be desired in others. After multiple back-and-forth iterations, here’s what came to life:

1. 45-Degree Plinko Logic Fully Working & Better Winner Detection

This time, the AI managed to implement the diamond orientation cleanly. Pieces cascade downward exactly as if gravity were pulling them at an angle. Even better, the Plinko simulation continues automatically on every move when the board is rotated, creating an unpredictable—but fair—gameplay twist.
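To make the diamond-orientation drop concrete, here is a minimal sketch of how a single piece might settle. It is not the game's actual code: the `Grid` type, the `settlePiece` function, and the `pickLeft` parameter are all hypothetical, and the rule itself is an assumption (the corner at the last row and last column points straight down, and a piece with two free cells below it picks one at random).

```typescript
// Assumed model: grid[r][c] is 0 for empty, otherwise a player number.
// After the 45-degree turn, the corner at (rows-1, cols-1) is assumed to
// point straight down, so "falling" means stepping to (r+1, c) or (r, c+1).
type Grid = number[][];

// Moves the piece at (row, col) until neither cell below it is free.
// `pickLeft` decides ties; it is injectable so the drop can be made
// deterministic in tests. Returns the final [row, col].
function settlePiece(
  grid: Grid,
  row: number,
  col: number,
  pickLeft: () => boolean = () => Math.random() < 0.5,
): [number, number] {
  const player = grid[row][col];
  let r = row;
  let c = col;
  grid[r][c] = 0; // lift the piece off its starting cell

  for (;;) {
    const canLeft = r + 1 < grid.length && grid[r + 1][c] === 0;
    const canRight = c + 1 < grid[r].length && grid[r][c + 1] === 0;
    if (!canLeft && !canRight) break; // resting on pieces or the edges
    if (canLeft && (!canRight || pickLeft())) {
      r += 1; // slide down-left on screen
    } else {
      c += 1; // slide down-right on screen
    }
  }

  grid[r][c] = player; // drop the piece into its resting cell
  return [r, c];
}
```

Every step increases either the row or the column, so the loop always ends. Passing a fixed `pickLeft` makes a drop reproducible, which is handy when describing to an AI assistant exactly where a piece should land.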

Also, by explicitly spelling out which cells should be considered after pieces “settle,” I was able to guide the AI toward fixing the premature winner-detection bug. Now, the game only declares a winner once animations finish and the board is in a final state.
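The idea of checking for a winner only on a settled board can be sketched roughly as follows. The names here (`Cell`, `Board`, `findWinner`, `winnerIfSettled`, and the `piecesInMotion` counter) are illustrative assumptions, not taken from the project.

```typescript
// Hypothetical data model: the article does not show the real one.
type Cell = 0 | 1 | 2; // 0 = empty, 1 and 2 = players
type Board = Cell[][]; // board[row][col]

// Directions to scan from each cell: right, down, down-right, down-left.
const DIRECTIONS: ReadonlyArray<[number, number]> = [
  [0, 1],
  [1, 0],
  [1, 1],
  [1, -1],
];

// Returns the player who owns a run of four, or 0 if there is none.
function findWinner(board: Board): Cell {
  const rows = board.length;
  const cols = rows > 0 ? board[0].length : 0;
  for (let r = 0; r < rows; r++) {
    for (let c = 0; c < cols; c++) {
      const player = board[r][c];
      if (player === 0) continue;
      for (const [dr, dc] of DIRECTIONS) {
        let run = 1;
        while (run < 4) {
          const nr = r + dr * run;
          const nc = c + dc * run;
          if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) break;
          if (board[nr][nc] !== player) break;
          run++;
        }
        if (run === 4) return player;
      }
    }
  }
  return 0;
}

// Only consult the board once nothing is still animating.
function winnerIfSettled(board: Board, piecesInMotion: number): Cell {
  return piecesInMotion > 0 ? 0 : findWinner(board);
}
```

The point of the wrapper is that the win check reads the final board state, never an intermediate one: while any piece is still moving, the answer is always "no winner yet."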

2. Smoother, More Polished Gameplay

One subtle but important change: when animations are playing, the game now locks out user input. No more accidental double-clicks or mid-animation chaos. The flow feels natural, and when the animation ends, gameplay resumes seamlessly.
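One way to picture the input lock is a small guard around the click handler. This is a sketch under assumptions: `AnimationLock`, `PlayMove`, and `handleColumnClick` are made-up names, and the real game may gate input differently.

```typescript
// Hypothetical lock: counts running animations so clicks can be ignored
// until all of them have reported that they finished.
class AnimationLock {
  private active = 0;

  get locked(): boolean {
    return this.active > 0;
  }

  begin(): void {
    this.active += 1;
  }

  end(): void {
    if (this.active > 0) this.active -= 1;
  }
}

// Assumed shape of the function that animates a move: it must call
// `onSettled` exactly once when the animation is over.
type PlayMove = (column: number, onSettled: () => void) => void;

// Returns true if the click was accepted, false if it was dropped
// because a previous move is still animating.
function handleColumnClick(
  lock: AnimationLock,
  column: number,
  playMove: PlayMove,
): boolean {
  if (lock.locked) return false;
  lock.begin();
  playMove(column, () => lock.end());
  return true;
}
```

In a browser, `playMove` would typically call `onSettled` from a `transitionend` or `animationend` listener, so input resumes as soon as the last piece stops moving.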

The biggest pain point was updating the UI. A simple request (“let’s make this pretty”) led the AI to experiment with gradients and shading, but not every attempt worked, and several rounds broke all the animations. Ultimately, I scrapped most of these UI updates: the AI could not clean up its own mess, and it still did not grasp the UI elements as well as it grasped the logic.

Lessons from This Iteration

While the latest generation of AI models was much more capable than earlier ones, the experience still required collaboration and persistence. Some highlights:

- Explicit Guidance Wins: For the Plinko logic, the models needed detailed, step-by-step explanations of which board cells mattered. Once the instructions were precise, the implementation finally clicked.

- AI Tries Too Hard: Asking for polish often led to overcomplicated animations that broke the game entirely, especially for features that are more visual than computational. Several resets were needed to scale back the ambition and keep improvements manageable.

- Patience Pays Off: Unlike the rapid-fire pace of my original 30-minute experiment, this round was iterative. The process involved testing, breaking, refining, and trying again. This gave the AI more context on things that didn’t work, which helped slowly guide the experiment towards the desired outcome.

The Endgame

Looking back, this series of experiments shows a fascinating arc of AI-assisted development:

- First, raw generation that could build a working prototype quickly.

- Second, an AI refactorer that could stabilize the project and begin exploring more complex mechanics.

- And finally, newer AI models capable of handling logic-heavy features, fixing longstanding bugs, and getting closer to adding some polish.

The result is a quirky, playable Four-in-a-Row variant that includes smooth animations, reliable winner detection, and Plinko-style rotations that make every game unpredictable and fun.

AI Levels Up

This experiment has been as much about AI’s evolution as it has been about building a game. From quick prototypes to robust testing to polished mechanics, each stage has reflected how AI is maturing as a partner in software development.

While we’re not yet at the point where AI can autonomously ship flawless, innovative games, the progress is undeniable. With each new model, the gap narrows between what I envision and what AI can deliver.

And for me, that’s the real win condition.