Article summary

Since ChatGPT launched to the public in 2022, I don’t think there is a single tech topic I’ve heard more about than artificial intelligence and machine learning. It is talked about so much that I regularly hear about it on my nominally non-tech-related podcasts. It truly has made a significant impression on the general public.

I have, for the most part, paid almost zero attention to anything AI-related since I had a graduate seminar on the topic almost 20 years ago. So I figured, shoot, what better time to tinker with it a bit? Here, I’ll share my experience starting with a public dataset, generating a model, and querying that AI model on my laptop.

Context and Goals

A small group of friends has a hobbyist project authored in C#. One thing this system does is run a regular garbage collection routine on a once-a-day timer. Since this routine affects users using the system, it is helpful to broadcast a warning that the system will be affected during the garbage collection. Rather, it is supposed to do that, but, as of now, this warning message is not being broadcast.

This leads to my secondary goal: can I use generative AI to create the C# code to implement this warning message timer? Or at least have it create a first draft?

My primary goal, of course, is to get hands-on experience with the AI domain and learn more about the vocabulary. I want to know how an AI model is created and how to query one.

Spoiler alert: I didn’t get all that far with generating meaningful C# code. But I definitely achieved my primary goal of building some language and experience around this topic.

The Experiment

The Starting Dataset

First, I needed to get example data — a dataset — of existing C# code, so I turned to Hugging Face, a site “on a mission to democratize good machine learning, one commit at a time.” Hugging Face hosts all sorts of prebuilt models and datasets created by the community, as well as creating workspaces for teams to work within.

For my local experiments, I used the codeparrot/xlcost-text-to-code Csharp-program-level dataset. This dataset has almost 11,000 records across the training, test, and validation rows. This dataset is far from large, but it’s big enough to give my laptop some work to do, making it a reasonable place to start.

Building a Model

Now that we’ve got our dataset, we can use it to tune our base AI model. In my case, I’ll be building off the GPT2 model and using the Csharp program-level dataset to tune it.

Here is where I need to come clean about something: while I know Ruby extremely well, I had effectively never touched Python until I started this experiment. With my Ruby background, I can read Python effectively, but producing Python takes some effort. And since I hadn’t used any of these AI technologies until now, I needed even more help.

Thus I turned to ChatGPT-4. I can ask GPT-4 to show me some example Python code for this. Then, I can read it and, if needed, tweak it a bit to do what I need. After asking GPT-4 to create an initial Python file for me, as well as using it to debug and address some errors, I ended up with this Python code for training my new model:

from datasets import load_dataset

from transformers import GPT2Tokenizer, GPT2Config, GPT2LMHeadModel, Trainer, TrainingArguments

model_checkpoint = "gpt2"

tokenizer = GPT2Tokenizer.from_pretrained(model_checkpoint)

tokenizer.pad_token = tokenizer.eos_token

config = GPT2Config.from_pretrained(model_checkpoint)

model = GPT2LMHeadModel.from_pretrained(model_checkpoint, config=config)

# Load your dataset

dataset = load_dataset("codeparrot/xlcost-text-to-code", "Csharp-program-level")

# Modify the tokenize_function to pad the input sequences

def tokenize_function(examples):

tokenized = tokenizer(

examples["code"],

truncation=True,

padding="max_length",

max_length=128,

)

tokenized["labels"] = tokenized["input_ids"].copy()

return tokenized

# Tokenize and pad the dataset

tokenized_dataset = dataset.map(tokenize_function, batched=True)

# Train and save the model

training_args = TrainingArguments(

output_dir="csharp_fine_tuned_gpt2",

overwrite_output_dir=True,

num_train_epochs=3,

per_device_train_batch_size=16,

per_device_eval_batch_size=16,

evaluation_strategy="epoch",

save_strategy="epoch",

logging_dir="logs",

)

trainer = Trainer(

model=model,

args=training_args,

train_dataset=tokenized_dataset["train"],

eval_dataset=tokenized_dataset["test"],

)

trainer.train()

trainer.save_model("csharp_fine_tuned_gpt2")

tokenizer.save_pretrained("csharp_fine_tuned_gpt2")

Running this code will download the dataset and models and start the tuning process. On my Apple laptop with an M1 Max chip, this takes about 75 minutes. Not terrible.

Querying the Model

Now that we’ve got a model, we can query it. I once again turned to ChatGPT-4 to give me the first draft of a program to query the model. This is what I got:

import torch

from transformers import GPT2Tokenizer, GPT2LMHeadModel

# Load the fine-tuned model and tokenizer

model = "./csharp_fine_tuned_gpt2"

tokenizer = GPT2Tokenizer.from_pretrained(model)

model = GPT2LMHeadModel.from_pretrained(model)

# Get input text from the command line

input_text = input("Enter your input text: ")

# Tokenize the input text

input_ids = tokenizer.encode(input_text, return_tensors="pt")

attention_mask = torch.ones_like(input_ids)

# Generate text

output_sequence_length = 1000 # Adjust the length of generated text as needed

output = model.generate(

input_ids,

max_length=output_sequence_length,

num_return_sequences=1,

attention_mask=attention_mask,

pad_token_id=tokenizer.eos_token_id,

)

# Decode the generated text

generated_text = tokenizer.decode(output[0], skip_special_tokens=True)

print(generated_text)

Now let’s try querying this model. I’ll start with something simple:

> venv/bin/python query_csharp_model.py

Enter your input text: sort

sort System ; class GFG { static int countPairs ( int [ ] arr, int n ) { int count = 0 ; for ( int i = 0 ; i < n ; i ++ ) { if ( arr [ i ] % 2 == 0 ) count ++ ; } return count ; } public static void Main ( ) { int [ ] arr = { 1, 2, 3, 4, 5 } ; int n = arr. Length ; Console. WriteLine ( countPairs ( arr, n ) ) ; } }

Hmm, that definitely looks like something that might be sort of related. Since my original goal was to get some code related to timers, let’s try that now:

> venv/bin/python query_csharp_model.py

Enter your input text: timer

sort System ; class GFG { static int countPairs ( int [ ] arr, int n ) { int count = 0 ; for ( int i = 0 ; i < n ; i ++ ) { if ( arr [ i ] % 2 == 0 ) count ++ ; } return count ; } public static void Main ( ) { int [ ] arr = { 1, 2, 3, 4, 5 } ; int n = arr. Length ; Console. WriteLine ( countPairs ( arr, n ) ) ; } }

Err, ok. That’s the same output I got before and doesn’t have anything to do with timers. Let’s try one more thing:

> venv/bin/python query_csharp_model.py

Enter your input text:

sort System ; class GFG { static int countPairs ( int [ ] arr, int n ) { int count = 0 ; for ( int i = 0 ; i < n ; i ++ ) { if ( arr [ i ] % 2 == 0 ) count ++ ; } return count ; } public static void Main ( ) { int [ ] arr = { 1, 2, 3, 4, 5 } ; int n = arr. Length ; Console. WriteLine ( countPairs ( arr, n ) ) ; } }

Here, I simply gave it a blank space as input. And I got the same output. Clearly, something is wrong here.

Understanding the Results

So far, the experiment has been fun, but I haven’t gotten any meaningful results — at least in terms of code. Let’s see if ChatGPT can help us understand why.

I started a conversation with ChatGPT and gave it the code. I asked it a couple of preliminary questions to confirm it understood what I’m trying to do, and then I asked it this:

“Why do all three input texts give the same result?”

ChatGPT’s answer:

The reason all three input texts give the same result might be due to the limitations of the fine-tuned GPT-2 model itself. Some possible reasons include:

- Insufficient training data. The model may not have been trained on a diverse enough dataset to cover various scenarios or might lack enough examples to generate contextually relevant code for different inputs.

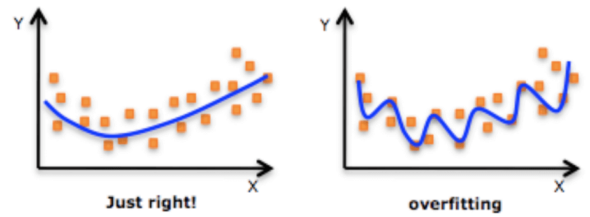

- Overfitting. The model might have overfit to the training data, causing it to generate similar code snippets even when the input text is different.

- Limited understanding of input. The model might struggle with understanding the context or intent behind the input text, leading it to generate unrelated code snippets.

- Inadequate fine-tuning. The fine-tuning process may not have been sufficiently optimized or run for enough epochs to effectively adapt the model to the specific task of generating C# code snippets.

To improve the model’s performance, you could try increasing the amount of training data, refining the fine-tuning process, or using a different model architecture that is more suitable for the task at hand.

This makes sense. I think reason #1 is the main culprit. My dataset of ~11,000 C# samples is not large. And I also suspect that reason #1 feeds into reason #2.

Next Steps?

While I didn’t get any usable C# code out of this experiment, that was a secondary goal. I achieved my primary goal — building vocabulary and understanding around this process. It was a fun learning experience to build and query my own AI model locally.

The next thing I’d consider trying is using a bigger C# dataset and running the fine-tuning on a larger system, e.g., another computer I have with a 3080 Ti GPU. Or perhaps I’d use something significantly beefier, like Amazon’s Titan system.

The amount of progress in the AI space in the last few years is astounding. I look forward to seeing what the future brings.