Almost every standard library (in almost every programming language) comes with a set of advanced math functions (sin(), cos(), sqrt(), arctan(), etc…). However, (very) occasionally you need an implementation of these functions more closely tuned to your use case. How does one go about implementing these functions? This post attempts to explain how!

These functions have a special name: Transcendental. In this context transcendental basically means that there is no easy, direct (algebraic) way to compute these functions. You have to use an iterative method, starting with a very rough approximation and gradually improving (hopefully) the accuracy with each step.

There are two tools that I’ve used to implement transcendental functions: Taylor series and Generalized Continued Fractions (Generally the generalized continued fractions are superior). Some functions (square root for example) have more specialized algorithms that have much better convergence properties than their Taylor series and generalized continued fraction equivalents. So as always, make sure you thoroughly google the function you are implementing to find existing algorithms / implementations that suite your purposes.

The basics:

Here is what arctan(z) looks like as a Taylor series (pictures gratefully borrowed from wikipedia):

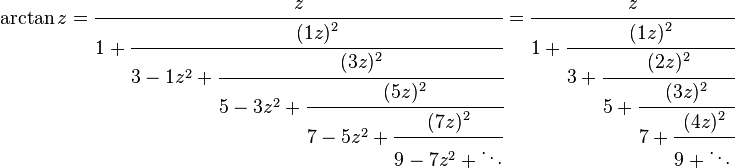

Here is what it looks like as a generalized continued fraction:

Taylor Series and generalized continued fractions are really two flavors of the same thing. Supposedly there is a way to convert between the two (and it looks like there is an algorithm here) but I haven’t found a good explanation for how to do so.

Taylor series and generalized continued fractions have very similar capabilities and limitations. Both are infinite “series” where each next piece (hopefully) provides a successively more accurate approximation. Both are most accurate (or converge the fastest) for inputs around a particular value. The further away the input is from that point, the more iterations are needed to get a certain level of accuracy. When you (or more likely, wolfram alpha) derives a Taylor series expansion, you can actually pick where you want this point to be . For some functions the expansions are only valid within a specific range. For example, both the Taylor series expansion and the generalized continued fraction for arctan(x) only give useful results for abs(x) <= 1.

But usually, we want our functions to work, and give good accuracy across a wide range of values. Usually this is done by making clever use of Identities. For example, for arctan, there is a very convenient trig identity:

![]()

which lets us convert an arctan(x), where abs(x) > 1 to pi/2 +- arctan(1/x) where abs(1/x) <= 1.

Similar things can be done with the other trig functions. Since sin and cos are periodic, we actually only need to be able to compute the range between 0 and pi/2. We can use trig identities to map inputs outside that range to an equivalent input within that range.

So enough talk. Lets get to some examples:

Here is an implementation of arctan(x) using a Taylor series:

Note: j and k are always integers, and n is the number of iterations to perform.

# taylor_arctan.py

def taylor_arctan(n, x):

approx = 0

for k in xrange(n):

j = 2 * k + 1

approx += ( (-1)**k * x**j ) / j

return approx

Here is an implementation of arctan(x) using a generalized continued fraction:

def continued_faction_arctan(n, x):

x2 = x*x

d = n * 2 + 1

for k in range(n, 0, -1):

f = k * 2 - 1

d = f + k*k*x2/d

return x / d

The implementation is a bit trickier, but you don’t have to take large powers of x, only basic arithmetic and a loop.

Also, notice that with a generalized continued fraction, when you want to stop, you just pretend that the next fraction in the “tower” is zero and call it good.

Both implementations need a wrapper function in order for them to work for inputs abs(x) > 1:

def arctan(n, x):

if x > 1.0:

return math.pi/2.0 - my_preferred_arctan_implementation(n, 1/x)

else:

return my_preferred_arctan_implementation(n, x)

Tada!

There is obviously a lot more that could be covered on this topic, but hopefully this will give you enough to start on.

Some parting advice:

- Make sure you really need to implement your own.

You’re about spend a lot of time trying to replace an existing, working, heavily tested, out-of-the-box solution. Make sure that you really do need something different. - Make some good tests.

This is usually pretty easy. You can generally use the math functions provided by your standard library for comparison. Just generate a few million test input values and pass them to both your “standard” function and to your custom version, and compare the outputs. As usual, be sure to test the critical points, and extreme values as those will be the most problematic. - If you’re using a datatype that is more restrictive than a float (like a fixed point type), I would recommend trying to get your basic algorithms working in a scripting language using floats first. And then once you know you have a working algorithm, try to port the algorithm to the desired language and types.

- Google, Wikipedia and WolframAlpha are your best friends.

I think you have a typo in the first gist, correct code as follow:

def taylor_arctan(n, XyZ):

approx = 0

for k in xrange(n):

j = 2 * k + 1

approx += ( (-1)**k * XyZ**j ) / j

return approx.

Also, have you used CORDIC before? This is another way to do it that required iteration as well, but I believe is a bit less tax-ish, that sometimes is important if you are working in an embedded enviroment.

Thanks, fixed.

I’ve looked at CORDIC but haven’t used it. For my particular use case I wanted something simple and I didn’t want to have to store the lookup tables (We were already nearly maxed out on memory usage). Accuracy was also less of a concern.

For the power series, you don’t want to calculate it in the straightforward way that you’ve shown. You’re subject to roundoff error, and you waste a lot of computing power. The classic trick for summing power series in a computer is, if you want to sum k terms of a_n * x^n, write it as (..(a_k*x + a_{k-1}) * x + a_{k-2}) * x + …)… + a_0. In code, assuming definitions of a and x,

sum = a(k) * x for i in range(k, -1, -1): sum = (sum + a(i)) * xIf you haven’t read Acton’s ‘Real Computing Made Real’, I cannot recommend it highly enough.

Hi!

Everything OK but when implementing this kind of thing, I’d rather make sure the final product is IEEE-754 compliant than any other thing. Otherwise you end up with ugly, nasty and error-prone problems like sqrt{2}*sqrt(2)-4!=0

But you probably already know this.

Pedro.

Job,

There’s also a typo in the continued fraction gist. The line

f = k * 2 + 1should be

f = k * 2 - 1Thanks, fixed.

[…] April 25th – Implementing Advanced Math Fuctions […]