Organizing a project’s architecture into layers is a common strategy. But I’ve noticed, over the course of my relatively short career, that a layered architecture becomes counter-productive when a project reaches a certain age or size (I’m not sure which).

When the Layer Model Works

The idea behind a layered approach is nice: any particular layer of the software should be interchangeable with another layer that implements the same interface. This helps to drive the code base to a certain level of reuse.

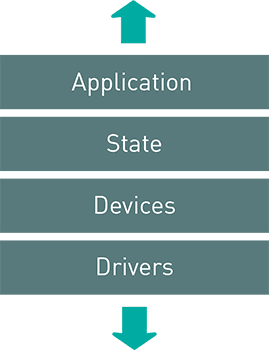

In general, the layers are stacked, and the rule is that a layer may depend only on the layer directly below it: it may not call into the layer above, and it may not call into any layer other than the one directly underneath it.
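To make those rules concrete, here is a minimal sketch of a hypothetical three-layer program (every function name is invented for illustration). In a real project each layer would live in its own .c file, and each downward call would be a named, link-time dependency resolved by the linker; the layers share one file here only so the sketch is self-contained.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Layer 1 (bottom): raw storage. It knows nothing about the layers above. */
static char storage_buf[64];

void storage_write(const char *data, size_t len)
{
    assert(data != NULL);
    /* Clamp so the fixed-size buffer is never overrun. */
    if (len >= sizeof storage_buf)
        len = sizeof storage_buf - 1;
    memcpy(storage_buf, data, len);
    storage_buf[len] = '\0';
}

const char *storage_read(void)
{
    return storage_buf;
}

/* Layer 2: records. It calls only into layer 1, by name. */
void record_save(const char *name)
{
    storage_write(name, strlen(name));
}

const char *record_load(void)
{
    return storage_read();
}

/* Layer 3 (top): application logic. It calls only into layer 2; reaching
 * down to storage_write() directly would break the layering rules. */
const char *app_remember_user(const char *user)
{
    record_save(user);
    return record_load();
}
```

Interchanging layer 1 would then mean linking a different object file that defines storage_write() and storage_read() with the same signatures, with no change to the layers above.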

In simple cases, this model works out well. There’s a cleanly defined system that’s easily modeled in our minds.

And When It Doesn’t

But problems start to show up when the project grows. One of the first things I notice is that the layer model becomes counter-productive to reusability; for instance, strictly following the layer model leaves us without a good home for utility code that should be used across several layers. Either the utility code will end up duplicated in undesirable ways or it will be added as an exception to the layer model.
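A small sketch of the utility-code problem, assuming a hypothetical 8-bit checksum helper that both the bottom (transport) layer and the top (application) layer need. All names are illustrative only.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Hypothetical utility: an 8-bit additive checksum. Two different layers
 * need it, so it has no natural home in the stack. */
uint8_t util_checksum(const uint8_t *data, size_t len)
{
    uint8_t sum = 0;
    assert(data != NULL || len == 0);
    for (size_t i = 0; i < len; i++)
        sum = (uint8_t)(sum + data[i]); /* wraps modulo 256 */
    return sum;
}

/* Bottom layer: computes the checksum stamped onto an outgoing frame. */
uint8_t transport_frame_checksum(const uint8_t *frame, size_t len)
{
    return util_checksum(frame, len);
}

/* Top layer: verifies a received payload. Reaching util_checksum() from here
 * skips every layer in between, so under the strict rules it must either be
 * duplicated in this layer or treated as an exception to the layer model. */
int app_payload_is_valid(const uint8_t *payload, size_t len, uint8_t expected)
{
    return util_checksum(payload, len) == expected;
}
```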

Another problem shows up when software has to interact with larger libraries that seem to span many layers. If this is third-party code, it will very likely be another exception to the layer model, tacked onto the side of the project along with the utility code. In other words, unless the developers have a policy of writing every bit of software they’ll end up depending on, the layered approach can’t be applied consistently across a project that depends on third-party libraries.

But I think the largest problem facing a layer model is that the layers start to mix together under the weight of the project. Higher layers may well start depending on implementation details in lower layers, and the abstractions of the layers start to leak badly. Now, I believe that all abstractions leak, but some leak in better ways than others. In particular, I’m worried about whether a layer can actually be interchanged with something that has an identical interface. That seems like something we should be able to validate, but how to validate it is not obvious to me.

An Alternative: Decoupling by Loosening Linker Dependencies

I’ve been using a different pattern to write much of my C software lately. It’s modeled on the concept of Dependency Injection. To me, it’s been valuable because it has allowed me to be more explicit about the interface on which my software depends. This pattern is really just an intentional use of callbacks in place of link-time dependencies. This, of course, has trade-offs, but I’ve started to value explicit interfaces much more than I value a reduction in indirection.
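The difference is easiest to see side by side. In this sketch (all names hypothetical), the commented-out version names its dependency, platform_write_line(), so the linker must find a definition of it somewhere; the live version receives the same dependency as a function pointer plus an opaque context pointer, a common C callback convention. capture_line() is one implementation a caller might inject.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Link-time version (shown only in this comment): the module names its
 * dependency, so the build fails unless platform_write_line() is defined.
 *
 *     void platform_write_line(const char *line);
 *
 *     void report_status(int code)
 *     {
 *         char buf[32];
 *         snprintf(buf, sizeof buf, "status=%d", code);
 *         platform_write_line(buf);
 *     }
 */

/* Callback version: the same dependency, passed in explicitly. */
typedef void (*write_line_fn)(void *ctx, const char *line);

void report_status(write_line_fn write_line, void *ctx, int code)
{
    char buf[32];
    assert(write_line != NULL);
    snprintf(buf, sizeof buf, "status=%d", code);
    write_line(ctx, buf); /* one extra indirection, but an explicit interface */
}

/* One implementation a caller might supply: capture the line in a buffer. */
struct line_buf {
    char text[32];
};

void capture_line(void *ctx, const char *line)
{
    struct line_buf *out = ctx;
    size_t n = strlen(line);
    if (n >= sizeof out->text)
        n = sizeof out->text - 1; /* truncate rather than overflow */
    memcpy(out->text, line, n);
    out->text[n] = '\0';
}
```

The cost is the trade-off mentioned above: every call goes through a pointer, and the caller has to supply the dependency. In exchange, report_status() states in its signature exactly what it needs.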

Requirements

In practice, injecting a module’s dependencies into the system requires a few things:

- Types to describe the functions and data that the modules will depend on.

- An initialization routine that passes a collection of these types to the module.

- Glue code on the outside to wire all the dependencies together (this, to me, is application code and the non-glue is library code).

- Optionally (but highly recommended), a handle that carries around the state for a particular initialized instance of the module.
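Here is a minimal sketch of how the four pieces listed above might fit together, assuming a hypothetical temperature-alarm module. None of these names come from the inversion_demo project; they are invented for illustration. In practice the types, init routine, and poll function would sit in the module’s own header and source file, with the glue in the application.

```c
#include <assert.h>
#include <stddef.h>

/* 1. Types describing what the module depends on (hypothetical). */
typedef int  (*read_temp_fn)(void *ctx);            /* returns degrees C */
typedef void (*raise_alarm_fn)(void *ctx, int temp);

typedef struct {
    read_temp_fn   read_temp;
    raise_alarm_fn raise_alarm;
    void          *ctx; /* handed back to every callback */
} alarm_deps_t;

/* 4. Handle carrying the state of one initialized instance. */
typedef struct {
    alarm_deps_t deps;
    int          threshold;
    int          alarms_raised;
} alarm_monitor_t;

/* 2. Initialization routine: the dependencies are passed in here. */
void alarm_monitor_init(alarm_monitor_t *m, const alarm_deps_t *deps, int threshold)
{
    assert(m != NULL && deps != NULL);
    assert(deps->read_temp != NULL && deps->raise_alarm != NULL);
    m->deps = *deps; /* copied, so the caller's struct may go out of scope */
    m->threshold = threshold;
    m->alarms_raised = 0;
}

/* Library code: uses only the injected functions, never a named external. */
void alarm_monitor_poll(alarm_monitor_t *m)
{
    int temp = m->deps.read_temp(m->deps.ctx);
    if (temp > m->threshold) {
        m->deps.raise_alarm(m->deps.ctx, temp);
        m->alarms_raised++;
    }
}

/* 3. Glue (application code). These dependencies are fakes, which is also
 * how a test could drive the module. */
typedef struct {
    int next_temp;
    int last_alarm_temp;
} fake_board_t;

static int fake_read_temp(void *ctx)
{
    const fake_board_t *board = ctx;
    return board->next_temp;
}

static void fake_raise_alarm(void *ctx, int temp)
{
    fake_board_t *board = ctx;
    board->last_alarm_temp = temp;
}

void app_wire_monitor(alarm_monitor_t *m, fake_board_t *board)
{
    alarm_deps_t deps = {
        .read_temp   = fake_read_temp,
        .raise_alarm = fake_raise_alarm,
        .ctx         = board,
    };
    alarm_monitor_init(m, &deps, 30); /* threshold is application policy */
}
```

Because the module only sees alarm_deps_t, a test can hand it fakes like these while a real application hands it functions that talk to actual hardware, with no change to alarm_monitor_poll().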

Benefits

An important property comes out of writing modules this way: we should be able to compile and link the module (and only the module) against a program with an empty main without any failures. This proves to us that we’re not inadvertently depending on the outside world through a named dependency. There are lots of other neat properties (like a pretty strong guarantee of non-interaction) that fall out of this pattern as well, but those are beyond the scope of what I’m covering here.
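One way to run that check, sketched under assumptions: the module lives in a hypothetical module.c, and a separate file contains nothing but an empty main. The build commands in the comment assume a Unix-style cc toolchain with default linker settings.

```c
/* link_check.c: a hypothetical translation unit whose only job is to show
 * that a module has no named dependencies on the outside world.
 *
 * Build sketch (file names are assumptions):
 *     cc -c module.c -o module.o
 *     cc link_check.c module.o -o link_check
 *
 * Linking module.o as an object file (not from a static archive) makes the
 * linker resolve every external symbol it references. If the module quietly
 * called, say, a board_read_temp() that nothing here defines, the second
 * command would stop with an undefined-reference error. Calls into the C
 * standard library still resolve, because cc links it by default. */
int main(void)
{
    return 0;
}
```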

An Example

I’ve prepared a small example that demonstrates this pattern. You can find the code on my GitHub account under the inversion_demo project. It’s broken into several sections:

- A Makefile

- Source Code

- Tests

- A Demo Application

The Makefile provides several build tasks that demonstrate different aspects of the program: one builds the demo, another runs the tests, and the last builds the module against a minimal main to demonstrate the independence of the code. In the source code, you can inspect how I’ve designed both the interface exposed to the user and the interface the module expects to be passed. The tests validate that the module’s objectives are met. The demo application shows how the module would be used in a ‘Real World’ situation.