Our Grand Rapids office has had bathroom monitors for longer than I’ve been working here (the kind of monitors that let you know when a bathroom is free; not the kind that tell the boss how you spend your time!). What started as a hobby project has become an integral part of the office culture: guests are informed of the red/green status indicators and the convention of leaving the door open when exiting.

Over time, some parts of the system stopped working and were abandoned. I had an idea for getting it working again, but it would involve learning some networking and IoT techniques that I wouldn’t normally encounter in my daily work. Side projects like this are great for broadening knowledge and staying sharp.

An Abandoned Bathroom Monitor System

The original “callaloo” system, made from custom radio hardware, was eventually replaced by “pottymon” using ESP8266 microcontrollers. The Ann Arbor office even got a similar system.

But despite the network connectivity, bathroom statuses were only ever displayed on our radiators. Fast-forward a few years, and the bathroom monitor deteriorated from a lack of interest. The radiator runs on screens mounted right outside the bathrooms on each floor, so the bathroom statuses became more of a novelty than anything useful. Eventually, the bathroom status on the radiator became unreliable, and it was removed. The Mac Mini “hub” that all of the microcontrollers reported to was decommissioned. The lights above the bathroom doors still worked fine, so nobody really missed pottymon.

In our current office, the lights above each bathroom door are visible from most of the floor. But eventually my desk ended up in a spot around a corner, so I couldn’t see any of the bathroom status lights, and I was curious whether the pottymon system could be resuscitated. What I really wanted was an icon in my macOS menu bar to display the status of all the bathrooms – so I could make an informed decision about whether to try for one on a different floor!

Proof of Concept

I wasn’t even sure if the microcontrollers inside the bathroom door sensors still worked. So I looked up some documentation on the last-known state of the system. The way it worked was that each bathroom had an ESP8266 board which would read the state of the door once per second. It could connect over Wi-Fi to an MQTT broker and report its status.

The machine that had been running the MQTT broker had been decommissioned, but the documentation included its static IP address. So as a quick test, I added the IP address to my own MacBook and started Wireshark to see if any of the microcontrollers would try to connect. And sure enough, I got a few incoming connection attempts!

Encouraged by how easy this might be, I installed mosquitto, a lightweight MQTT broker. Within a few seconds, the server received inbound connections, and I had clients publishing messages of “closed” or “open” to topics like “callaloo/upstairs-east” and “callaloo/downstairs-south”.

As a proof of concept, I wrote a Ruby script to subscribe to the MQTT server and display the status of each room.

![]()

I was still skeptical of the sensor reliability, so I included timers showing how long since the status had last changed. Additionally, a room that hadn’t been heard from in over 10 seconds would change to “unknown” (yellow). But everything seemed to be working great, and for a few days, I was satisfied just to have a sliver of a terminal window running to keep an eye on the bathroom statuses. But then something started to go wrong.
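The bookkeeping behind those timers is simple enough to sketch. This is not the original script: the `RoomTracker` class and its method names are hypothetical, and the MQTT subscription is left out so that only the timing logic remains. The clock is passed in as an argument to make the behavior easy to check.

```ruby
# Hypothetical tracker for the terminal display (names are illustrative).
class RoomTracker
  STALE_AFTER = 10 # seconds without a report before a room shows "unknown"

  Room = Struct.new(:status, :last_seen, :changed_at)

  def initialize
    @rooms = {}
  end

  # Record one report from a sensor, e.g. record("upstairs-east", "closed").
  def record(name, status, now = Time.now)
    room = (@rooms[name] ||= Room.new(status, now, now))
    # Only move the "changed" timestamp when the status actually flips.
    room.changed_at = now if room.status != status
    room.status = status
    room.last_seen = now
  end

  # Status to display: the last report, or "unknown" if the room went quiet.
  def status(name, now = Time.now)
    room = @rooms[name]
    return "unknown" if room.nil? || now - room.last_seen > STALE_AFTER

    room.status
  end

  # Whole seconds since the status last changed.
  def seconds_since_change(name, now = Time.now)
    (now - @rooms.fetch(name).changed_at).to_i
  end
end
```

A subscriber would call `record` for every incoming message and redraw the terminal from `status` and `seconds_since_change` on a timer.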

MacGyver Territory

Occasionally, a room status would be “unknown” for a long period of time. Looking at the MQTT broker, I could see that no updates were being received. And debugging further, I saw that the MQTT broker was forcing clients to disconnect, saying that another client with the same name was already connected.

After doing some more debugging with Wireshark, and reading about the MQTT protocol, I narrowed the problem down to the initial connection setup. When an MQTT client first connects, it includes a name to uniquely identify itself. If the MQTT broker sees a client with the same name connecting twice, it will drop the first connection.

Each microcontroller had been configured with the name of the room it was attached to, like “upstairs-east”. But for some reason, sensors would occasionally connect with some default name, “ESP8266Client”. And if more than one did that simultaneously, only the last one would win.

This was a problem because I didn’t have access to the firmware running on the microcontroller. And I wanted to avoid re-flashing new firmware if possible. After scouring the mosquitto documentation for an option to allow multiple clients with the same ID, I discovered that this is intentional behavior built into the MQTT protocol itself.
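To make the failure mode concrete, here is a toy model of the takeover rule. It is not how mosquitto is implemented; `ToyBroker` is a hypothetical stand-in for a broker's table of connected clients, and the IP addresses are made up.

```ruby
# Toy model of a broker's table of connected clients (not mosquitto's code).
class ToyBroker
  def initialize
    @connections = {} # client ID => label for the current connection
  end

  # Register a connection under a client ID.
  # Returns the earlier connection that gets dropped, or nil if the ID was free.
  def connect(client_id, connection)
    dropped = @connections[client_id]
    @connections[client_id] = connection
    dropped
  end

  # Connections that are still alive.
  def connected
    @connections.values
  end
end

broker = ToyBroker.new
broker.connect("upstairs-east", "10.0.1.51")           # configured name: no conflict
broker.connect("ESP8266Client", "10.0.1.52")           # first sensor using the default name
dropped = broker.connect("ESP8266Client", "10.0.1.53") # second one kicks the first off
```

With two sensors falling back to the default name, each reconnect by one evicts the other, which matches the long “unknown” stretches I was seeing.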

It was getting into MacGyver territory, but I figured that if I could rewrite the incoming connection request on the fly, I could ensure each client was using a unique ID. This seemed like yet another job for socat!

Using Socat

After writing a little utility rewrite.rb to do string replacement within a binary stream, I incorporated it into a proxy of sorts using socat:

proxy.sh

socat TCP4-LISTEN:1883,fork EXEC:./intercept.sh

Port 1883 is what an MQTT broker listens on by default, so I needed to reconfigure the actual MQTT broker to listen on a different port (I picked 1882). So when a client connects, socat will invoke ./intercept.sh, connecting its STDIN and STDOUT to the network stream.

intercept.sh

./rewrite.rb ESP8266Client peer${SOCAT_PEERADDR} | socat STDIO TCP:localhost:1882

When socat runs a program with EXEC, it conveniently sets a few environment variables. SOCAT_PEERADDR is the IP address of the connecting client. So now rewrite.rb is replacing any occurrences of “ESP8266Client” with something like “peer10.0.1.52”. It’s critical that the length of the replacement string is the same as the original since this network stream is full of byte counters (no null-terminated strings). It turns out that these microcontrollers were given statically-assigned IP addresses, so I didn’t have to worry about the length changing unexpectedly.

Finally, the rewritten stream is sent through another instance of socat to the local MQTT broker. Because socat forwards both STDIN and STDOUT, responses from the MQTT broker are returned to the original client without modification.
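The core of a utility like rewrite.rb could look roughly like the sketch below. It is not the original script: the `rewrite` and `connect_packet` helpers are hypothetical, and the packet layout assumes an MQTT 3.1.1-style CONNECT, which may differ from what the sensors actually send. It shows why the equal-length rule matters: the client ID is preceded by a two-byte length, and the packet header carries the length of everything that follows.

```ruby
# Replace every occurrence of `from` with `to` in a binary string.
# Refuses any replacement that would change the length of the stream.
def rewrite(data, from, to)
  unless from.bytesize == to.bytesize
    raise ArgumentError, "replacement must be exactly #{from.bytesize} bytes"
  end

  # Work on raw bytes; the block form avoids backslash handling in `to`.
  data.b.gsub(from.b) { to.b }
end

# Build an MQTT 3.1.1-style CONNECT packet (illustration only).
def connect_packet(client_id)
  # Protocol name (length-prefixed), protocol level 4, clean-session flag,
  # keep-alive of 15 seconds.
  variable_header = [4, "MQTT", 4, 0x02, 15].pack("na*CCn")
  # The client ID is also length-prefixed, not null-terminated.
  payload = [client_id.bytesize, client_id].pack("na*")
  body = variable_header + payload
  # Fixed header: packet type, then the number of bytes that follow
  # (a single byte is enough for packets shorter than 128 bytes).
  [0x10, body.bytesize].pack("CC") + body
end

original = connect_packet("ESP8266Client")
patched  = rewrite(original, "ESP8266Client", "peer10.0.1.52")
```

Because both names are 13 bytes long, `patched` is byte-for-byte what a client named “peer10.0.1.52” would have sent. A real filter would apply `rewrite` to data read from STDIN and write the result to STDOUT; this sketch ignores the case where a match is split across two reads.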

Status Updates for All

With all of this in place, I was able to get reliable bathroom status updates! This was great for me, but ultimately, I wanted it to be usable by anybody in the office. So the next step was to devise a mechanism to distribute the status to an arbitrary number of watchers.

The naive approach would be to just expose the MQTT broker and let anybody connect to it. But with five bathroom sensors each sending an update once per second, and say fifty clients connected, that would be a lot of redundant traffic. Never mind how small these packets would be; it just seemed excessive. Plus, by operating exclusively on a local network, this was the perfect opportunity to try multicast networking.

IPv4 reserves quite a large block of addresses for multicast: 224.0.0.0 through 239.255.255.255. Originally, multicast was intended to be used as a sort of pub-sub for the entire Internet, though most routers these days won’t forward multicast traffic. But the way it works is that a host “subscribes” to a particular multicast address. If configured, routers on the network segment must keep track of these subscriptions to forward traffic for relevant multicast addresses. On a single network segment (like my office), it’s a bit simpler, and multicast operates mostly like broadcast.
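That block is the single prefix 224.0.0.0/4, which makes it easy to check whether an address is a multicast address. A minimal sketch using Ruby’s standard `ipaddr` library (the `multicast?` helper is a hypothetical name, not part of the library):

```ruby
require "ipaddr"

# The whole IPv4 multicast block: 224.0.0.0 through 239.255.255.255.
MULTICAST_RANGE = IPAddr.new("224.0.0.0/4")

# True if the given dotted-quad address falls inside the multicast block.
def multicast?(address)
  MULTICAST_RANGE.include?(IPAddr.new(address))
end

multicast?("239.192.0.100") # a multicast group address
multicast?("192.168.0.10")  # an ordinary unicast address
```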

Even so, I liked the idea of a hub coalescing the status updates from all five sensors and broadcasting a single update once a second. Interested applications on the network could subscribe to this broadcast without the overhead of maintaining persistent (and redundant) connections to the hub.

Adding Ruby

Getting this to work was more straightforward than I imagined. I was still using my own MacBook as the hub, so I decided to write the transmitter in Ruby. Here’s the relevant snippet for sending multicast UDP datagrams:

    require "socket"

    MULTICAST_ADDR = "239.192.0.100"
    MULTICAST_PORT = 1234

    sock = UDPSocket.new
    payload = "some data payload"
    # Flags are 0; the destination is the multicast group rather than a single host.
    sock.send(payload, 0, MULTICAST_ADDR, MULTICAST_PORT)

This is pretty much like sending a UDP datagram to any other IP address. Much of the multicast range is reserved for various purposes, but the 239.192.0.x range is available for private use (I appreciate that the 192 is reminiscent of the 192.168.0.x private unicast range).

On the receiving side, it takes a few more steps because you’re not just binding a socket to an address already on the host. First, you need to become a member of the multicast group.

    require "socket"
    require "ipaddr"

    MULTICAST_ADDR = "239.192.0.100"
    MULTICAST_PORT = 1234
    BIND_ADDR = "0.0.0.0"

    sock = UDPSocket.new
    membership = IPAddr.new(MULTICAST_ADDR).hton + IPAddr.new(BIND_ADDR).hton
    sock.setsockopt(:IPPROTO_IP, :IP_ADD_MEMBERSHIP, membership)
    sock.bind(BIND_ADDR, MULTICAST_PORT)
    payload, _ = sock.recvfrom(1024)

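I am binding to 0.0.0.0, which means “any local address” for the bind call; in the membership request, it tells the operating system to pick its default interface for the group. After a few lines of membership setup we have a more typical bind call so we can listen on the port. Finally we wait for a datagram with sock.recvfrom (1024 is the size of the receive buffer; a datagram small enough to fit in a single network packet avoids fragmentation, where losing one fragment loses the whole datagram).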

With this in place, I could start having other people in the office run the “watcher” script to receive status updates. But this all needed a more permanent home, and a nicer interface.

Setting up the Raspberry Pi

It would have been interesting to get it running on the smallest hardware possible, since the processing power required is pretty minimal. But we had an unused Raspberry Pi, which is the quintessential device for tinkering with IoT (Internet of Things) applications. A Raspberry Pi is vastly overpowered for this use case, but since it runs Linux, everything I had set up so far would just work after installing a few packages.

I installed the “Lite” variant (one with no graphical desktop environment) of the Raspberry Pi OS to keep things simple. Then I just needed to install mosquitto (the MQTT server package), socat, and ruby to run my scripts.

Mosquitto comes with its own startup script, but I needed to craft some additional scripts to start up the proxy and transmitter services. This particular flavor of Linux uses systemd to manage services, so I needed to create a new service file in /etc/systemd/system. This is the config I came up with for the transmitter, pottymond-transmitter.service:

    [Unit]
    Description=Pottymon hub transmitter
    Wants=network.target

    [Service]
    Type=exec
    Environment="POTTYMON_PORT=1034"
    WorkingDirectory=/home/atomic/pottymon/hub
    ExecStart=bundle exec ruby transmitter.rb
    Restart=on-failure
    RestartSec=10

    [Install]
    WantedBy=multi-user.target

Most of the options are pretty self-explanatory, although the service dependencies can be tricky. Fortunately, there are lots of examples of network-dependent services, so that ended up pretty straightforward as well: pottymond-transmitter is dependent on network, and multi-user is “dependent” on pottymond-transmitter (think of multi-user as a pseudoservice that is responsible for starting up all the other services simply by depending on them).

Then I just needed to reload systemd to get it to recognize my new config, and then enable the service so it will start automatically on boot:

    sudo systemctl daemon-reload
    sudo systemctl enable pottymond-transmitter

The transmitter

The transmitter is the service responsible for subscribing to the (locally running) MQTT server and emitting status updates once per second. Each sensor was configured to report its status to a separate topic based on the location of the room, like “upstairs-east”. There are five bathrooms in the building, and I wanted to include timestamps of when the sensor last checked in and when the status last changed.

I wanted to keep the payload as small as possible, mostly to eliminate any problems with UDP packet fragmentation. I considered packing this information into a binary format, but in the interest of keeping the payload readable and easily parsable, I went with JSON. JSON encoders and parsers are readily available, and with only five bathrooms, the payload was still well under 1 KB.
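As a rough sketch of what building such a payload could look like (not the actual transmitter code): the field names below mirror the ones the menu bar app decodes, while the `status_payload` helper and most of the room names are made up for illustration.

```ruby
require "json"
require "time"

# Serialize the current state of every room into one JSON datagram.
# Each room is a hash with :id, :status, :updated_at and :changed_at.
def status_payload(rooms)
  entries = rooms.map do |room|
    {
      id: room[:id],
      status: room[:status],
      # ISO 8601 timestamps in UTC, e.g. "2024-01-01T12:00:00Z"
      updated_at: room[:updated_at].getutc.iso8601,
      changed_at: room[:changed_at].getutc.iso8601
    }
  end
  JSON.generate(rooms: entries)
end

# Five rooms; only the first and last names appear earlier in this post,
# the rest are placeholders.
names = %w[upstairs-east upstairs-west downstairs-north downstairs-east downstairs-south]
now = Time.now
rooms = names.map { |name| { id: name, status: "closed", updated_at: now, changed_at: now } }

payload = status_payload(rooms)
```

With five rooms this comes out to several hundred bytes, so it fits comfortably in a single datagram.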

macOS menu bar app

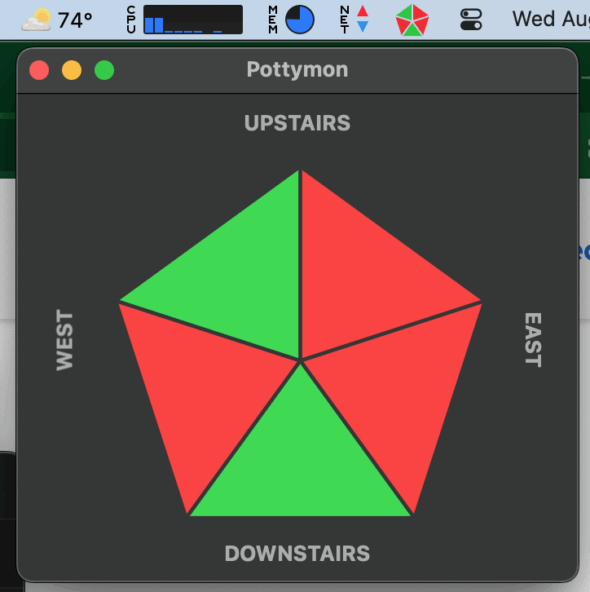

Since my ultimate goal was to have a menu bar app that showed all the bathroom statuses at a glance, I needed to come up with a compact representation. There are five bathrooms, and I wanted it to be easy to tell which indicator went with each room without having to memorize the order. I landed on a pentagon, since it gives each room equal weight and the slices visually map to the physical layout.

The heart of the app is the status receiver. Like the Ruby version I described in my previous post, the receiver must join a multicast group in order to receive the datagrams. So it contains this function:

    private func bindMulticastGroup() {
        let groupAddress = NWEndpoint.hostPort(
            host: NWEndpoint.Host("239.192.0.70"),
            port: NWEndpoint.Port(rawValue: 1034)!)
        guard let multicast = try? NWMulticastGroup(for: [ groupAddress ]) else { return }
        let group = NWConnectionGroup(with: multicast, using: .udp)
        group.setReceiveHandler(maximumMessageSize: 16384, rejectOversizedMessages: true) { [weak self] (message, content, isComplete) in
            if let content {
                let decoder = JSONDecoder()
                decoder.dateDecodingStrategy = .iso8601
                if let payload = try? decoder.decode(StatusDatagram.self, from: content) {
                    self?.rooms = payload.rooms
                    self?.lastReceived = Date.now
                }
            }
        }
        group.start(queue: .main)
        self.group = group
    }

Since the payload is JSON, I just needed to define some structs representing the shape of the data:

    struct Room : Decodable {
        let id: String
        let status: String
        let updated_at: Date
        let changed_at: Date
    }

    struct StatusDatagram : Decodable {
        let rooms: [Room]
    }

The rest of the app is SwiftUI, except for the pentagon, which is an NSImage drawn using CoreGraphics.

One thing I really wanted to avoid with this UI was any kind of ambiguity. If a room was not heard from in a while, or if the transmitter went down, I wanted the UI to reflect that (and not get stuck on the last known status or some default status). So the app continuously checks the “updated at” time of each room, and if any room has not been heard from in 30 seconds then its slice will become gray.

Another side-effect of this project was the ability to notice when a bathroom door was erroneously closed! Each room is single-occupancy, so if a door is shut accidentally then it might go unused for hours. But pottymon will show a yellow status for any room that has been closed for an unreasonable amount of time.
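The display rules from the last two paragraphs boil down to a small decision function. The app itself is written in Swift; the sketch below uses Ruby, like the hub scripts, purely to illustrate the logic. The `indicator` helper is hypothetical, and the 45-minute threshold for an “unreasonable” closure is an assumed value, not the one the app uses.

```ruby
STALE_AFTER = 30            # seconds without a report before a slice goes gray
LONG_CLOSED_AFTER = 45 * 60 # assumed threshold for a door left shut by accident

# Pick the color for one room's slice.
# status is "open" or "closed"; the timestamps are Time objects.
def indicator(status, updated_at, changed_at, now = Time.now)
  return :gray if now - updated_at > STALE_AFTER # not heard from recently
  return :green if status == "open"              # free

  # Closed: red while plausibly occupied, yellow once it has been too long.
  now - changed_at > LONG_CLOSED_AFTER ? :yellow : :red
end
```

Checking staleness first means a room never shows a confident red or green based on old data.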

More network debugging

Everything was working great until I took my laptop into a conference room. I have wired ethernet at my desk, but the app would stop receiving updates when I switched to Wi-Fi. But if I started the app while on Wi-Fi, everything worked normally (even after plugging back in at my desk).

I soon discovered that the multicast group membership was interface specific. When the app started, it would bind the group membership to whatever interface was active and highest priority at the time. Since wired connections generally have a higher service order (although this is configurable), the problem only manifested when a wired connection was active.

With some digging, and help from ChatGPT, I found an obscure networking API that would allow my app to be notified about network changes. It didn’t play real well with my SwiftUI app, since it required crossing the bridge to Objective-C land, but this is the contraption I ended up with:

    import Foundation
    import SystemConfiguration

    let NETWORK_CHANGED_NOTIFICATION = "NetworkStatusChanged"

    func callback(store: SCDynamicStore, changedKeys: CFArray, context: UnsafeMutableRawPointer?) -> Void {
        DispatchQueue.main.async {
            print("Network status changed!")
            NotificationCenter.default.post(name: Notification.Name(NETWORK_CHANGED_NOTIFICATION), object: nil)
        }
    }

    class NetworkMonitorDaemon {
        private var thread: Thread?

        init() {
            start()
        }

        deinit {
            stop()
        }

        private func start() {
            var context = SCDynamicStoreContext(version: 0, info: nil, retain: nil, release: nil, copyDescription: nil)
            self.thread = Thread {
                guard let store = SCDynamicStoreCreate(nil, "NetworkStatusMonitoring" as CFString, callback, &context) else {
                    print("Failed to create SCDynamicStore")
                    return
                }
                let keys = ["State:/Network/Global/IPv4" as CFString] as CFArray
                SCDynamicStoreSetNotificationKeys(store, keys, nil)
                let runLoopSource = SCDynamicStoreCreateRunLoopSource(nil, store, 0)
                CFRunLoopAddSource(CFRunLoopGetCurrent(), runLoopSource, .defaultMode)
                while !Thread.current.isCancelled {
                    CFRunLoopRunInMode(.defaultMode, 1.0, false)
                }
                CFRunLoopStop(CFRunLoopGetCurrent())
            }
            self.thread?.start()
        }

        private func stop() {
            self.thread?.cancel()
            self.thread = nil
        }
    }

Basically, this registers a function to be called when the system network configuration changes. Whenever that happens, the function posts a “NetworkStatusChanged” notification within the app. The status receiver can then listen for that notification and restart its multicast group membership. This ensures it is always bound to the highest priority active network interface.

Final Thoughts

This iteration of pottymon has been operating for more than a year now. And although I don’t depend on it, it was a fun side project that also has some utility.