Lately, I have been investigating performance improvements for heatshrink, my data compression library for embedded systems. Since it may be processing sensor data in real-time, or compressing data as it transfers over a network, compression can directly impact overall throughput. After experiments with string search and indexing algorithms, I’ve settled on a particularly effective method, which I’m calling a “flattened linked-list index”.

Where’s the Time Going?

Before trying to speed it up, I measured where it was spending most of its time. Profiling revealed two hotspots:

- Detecting repeated patterns in recent history.

- Managing bit-buffering for its I/O.

Since heatshrink’s algorithm (LZSS) compresses by replacing duplicated data with references to recent copies (within a “sliding window” of history), it’s not surprising that searching for them represents a significant part of its overall work. Removing longer matches leads to better compression, so it often has to compare several potential options. (Here’s a great visualization of LZ77, a predecessor to LZSS, finding duplication in Poe’s “The Raven”.)

The bit-buffering hotspot is proportional to the amount of overall I/O, and necessary because heatshrink can suspend and resume compression between individual bits of input. Not much can be done to streamline that further, beyond copying whole bytes when possible.

What about the Usual Approaches?

That leaves trying to speed up the searching. While repeated substring search is a well-studied problem, the focus has historically been on large data sets, such as genetic data. Instead of one large buffer, heatshrink may search tens of thousands of small (<4 KB, typically <512 B) buffers per second, so constant factors for constructing indexes such as suffix trees or suffix arrays will overwhelm any improvements in search time. Big-O analysis is not the whole story when N stays small, because asymptotic effects don’t get a chance to make up for setup costs. Scaling down can require different trade-offs than scaling up. This is why advanced sorting algorithms still tend to fall back on insertion or bubble sort once they’ve recursed down to only a few elements – there’s less overhead to just compare and swap adjacent values in memory.

Also, heatshrink is designed to run in embedded environments, which usually have severely limited memory. For example, an Arduino Uno only has 2 KB of SRAM. This rules out lookup tables with several bytes of overhead per byte of data, as well as algorithms that need lots of working memory to construct the index. Other indexing algorithms, such as FM-index, do their own compression during their index construction. Doing the Burrows-Wheeler transform inside LZSS would add way too much additional processing!

Flattened Linked-List Index

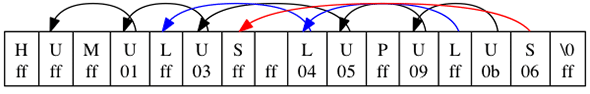

After trying several approaches, I’ve settled on a solution that fits heatshrink’s trade-offs particularly well, which I’m calling a “flattened linked-list index”. It builds a list of offsets into the data, so that the scanner can jump from each byte to its previous occurrence (if any), skipping over everything along the way that couldn’t possibly match. For example, an index for the text “HUMULUS LUPULUS” looks like this:

For each “U” except the first, the corresponding position in the index points to the position of the previous “U”. Similarly, the “L”s and “S”s refer to their previous locations. During compression, this helps find repeated substrings (such as “ULUS”) faster, by greatly reducing the number of positions in the data that have to be compared.

The relevant code is in do_indexing and find_longest_match. While I don’t believe it is novel, I haven’t seen it mentioned in indexing research, so it’s worth documenting.

- For an array of N bytes, B (“buffer”), create another array of N unsigned ints of appropriate size, I (“index”).

- Allocate another array of 256 unsigned ints, P (“previous”). This can be stack-allocated, since it will no longer be needed once indexing is complete.

- Set every byte in P to 0xFF.

- For i=0 to N-1, set I[i] to P[B[i]], then set P[B[i]] to i.

- Free P.

In C:

uint16_t *build_index(const uint8_t *buf, uint16_t size) {

uint16_t *index = malloc(size * sizeof(uint16_t));

/* (allocation checking omitted for space) */

uint16_t prev[256];

memset(prev, 0xFF, sizeof(prev));

for (uint16_t i = 0; i < size; i++) {

uint8_t byte = buf[i];

index[i] = prev[byte];

prev[byte] = i;

}

return index;

}

This constructs an array of flattened linked lists, where index[i] gives the offset to the previous instance of the byte at buf[i] in the buffer, or 0xFFFF if there aren’t any more. Since the encoder is scanning backward for the longest repeated substring, this allows it to skip over offsets whose first byte couldn’t possibly match. This index can be constructed in one pass with just an increment, a few pointer dereferences, and a few writes per byte, so it has very small constant factors. During typical operation, heatshrink may construct, briefly use, and then throw away 1024 indices per MB of data, so their construction has to be cheap. Since it’s just an array of 16-bit offsets, it only adds 2 bytes of overhead per byte in the buffer.

While not terribly sophisticated (a suffix array would help eliminate cases where the second byte differs), this is surprisingly effective. I timed compressing a 75 MB file with a client’s sensor data, and the flattened linked-list index took about 4.2 seconds on my laptop, compared to about 25 seconds for linear searching without an index. The next fastest option, using a counting sort variant to generate a binary-search-able permutation vector, took about 8.5 seconds. (heatshrink also compressed slightly faster than gzip, though it is trading a lower compression ratio for drastically lower memory usage.)

To see how much faster heatshrink could be without memory limits, I increased the size of prev from 256 bytes to 64 KB, so only matches of at least 2 bytes will be indexed (eliminating most false positives). That actually took longer (over 6 seconds!) due to the additional time in memset. Something extremely clever could potentially beat heatshrink’s current strategy (perhaps using dynamic programming to improve the index during searching), but its low overhead makes it very difficult.

Interesting idea for performance improvement in String indexing application. Thanks