Article summary

We recently revamped our backup system and decided that we wanted some form of offsite backup in the cloud (as opposed to physical offsite backups).

This presented a challenge, however, as we have a fair amount of data to back up… our Internet connection isn’t the fastest in the world… and we needed the data secured.

Considering Our Options

Our initial thoughts were that we should use something like rsync to reduce the amount of data that needed to be transferred, minimizing backup times and the impact on our Internet connection.

However, we also wanted to use some sort of standardized cloud storage (such as Amazon S3 or Rackspace Cloud) so that we could store large amounts of data relatively inexpensively.

Furthermore, we wanted to secure our backups so that only we would be able to access them. While Amazon and Rackspace offer data encryption, they control the encryption mechanism and keys, which could be a problem if either suffered a security breach or compromise.

The Benefits of Duplicity

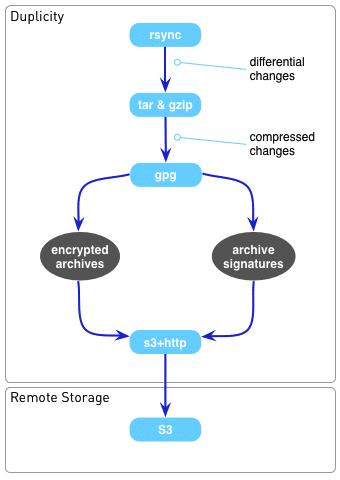

Fortunately, we found a nifty tool called ‘Duplicity’ that allowed us to do everything we wanted. Duplicity:

- Keeps signatures and deltas of files and directories so that only modified files and directories need to be synchronized.

- Uses librsync to ensure that incremental backups only need to record differential changes to files and directories.

- Supports several different remote communication protocols, such as SCP, and storage methods, such as Amazon S3.

- Supports encryption of all backups with GnuPG (symmetric and asymmetric encryption methods supported).

- Allows full and incremental backups, preserving backup sets of arbitrary age.

- Allows restoration from remote media without the need to retrieve all backup sets.
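Because full and incremental backup sets accumulate over time, you will eventually want to prune old ones. Below is a minimal sketch, assuming the S3 target used in the examples later in this article and a hypothetical policy of keeping the three newest full backups; `collection-status` and `remove-all-but-n-full` are Duplicity commands, while the `prune_backups` function and its default are illustrative.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: print the backup chains stored at TARGET, then delete
# every full backup set (with its incrementals) except the newest KEEP ones.
# Without --force, Duplicity only lists what it would remove.
prune_backups() {
  local target="$1" keep="${2:-3}"   # illustrative default: keep 3 full backups

  duplicity collection-status "$target"
  duplicity remove-all-but-n-full "$keep" --force "$target"
}

# Example (with the AWS credentials exported as in the examples below):
#   prune_backups s3+https://my-backups/mystuff 3
```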

Duplicity has actually been around for a long time (since 2002), with support for Amazon S3 since at least 2007. (It’s somewhat of a mystery why we didn’t find it sooner.)

Working with Duplicity is very simple. Arguably, the most difficult part is setting up GnuPG. This Debian administrator article proved quite helpful in getting GnuPG set up.
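Once GnuPG is set up, the asymmetric examples below need the IDs of your keys. The sketch that follows pulls them out of GnuPG’s machine-readable listing, assuming the documented `--with-colons` layout in which field 5 of a `pub` record is the key ID and field 10 of a `uid` record is the user ID; the `key_ids_for` function and the `backup@example.com` address are hypothetical.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: read `gpg --list-keys --with-colons` output on stdin and
# print the ID of every public key with a user ID containing PATTERN.
# Field 5 of a "pub" record is the key ID; field 10 of a "uid" record is the
# user ID. A key with several matching user IDs is printed only once.
key_ids_for() {
  local pattern="$1"
  awk -F: -v pat="$pattern" '
    $1 == "pub" { keyid = $5 }
    $1 == "uid" && index($10, pat) > 0 && !seen[keyid]++ { print keyid }
  '
}

# Example:
#   gpg --list-keys --with-colons | key_ids_for backup@example.com
```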

It’s also quite easy to use Duplicity for backups within your own network (using something like SCP), or to simply store backups locally (using local file storage). Duplicity supports a host of other options and features as well.
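Moving the backups inside your own network only changes the target URL. A minimal sketch, assuming a hypothetical local mount point (`/mnt/backup-disk`) and a hypothetical LAN host and account (`backup@nas.example.lan`); `file://` and `scp://` are Duplicity URL schemes, and the `s3+https://` form simply mirrors the examples in this article. The `target_url` function itself is illustrative.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: print a Duplicity target URL for backup NAME on one of
# three illustrative destinations. Only the scheme and location differ.
target_url() {
  local kind="$1" name="$2"
  case $kind in
    local) printf 'file:///mnt/backup-disk/%s\n' "$name" ;;       # "file://" + absolute path
    lan)   printf 'scp://backup@nas.example.lan/%s\n' "$name" ;; # another host over SCP
    s3)    printf 's3+https://my-backups/%s\n' "$name" ;;         # the S3 form used in this article
    *)     echo "target_url: unknown destination: $kind" >&2; return 1 ;;
  esac
}

# Example:
#   duplicity --full-if-older-than 30D /current_backups/mysecurefiles "$(target_url local mystuff)"
```

Keeping the URL in one place means the backup, listing and restore commands stay identical whichever destination you pick.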

For Example…

Below are a few examples of using Duplicity to create and manage backups.

Two ways to run Duplicity to encrypt and back up data to Amazon S3:

```shell
# Duplicity: symmetric encryption with GnuPG, using the given PASSPHRASE for the symmetric cipher
# --full-if-older-than 30D : If a full backup hasn't been made in the last 30 days, create a new backup set starting with a full backup
# --volsize 250 : Create volumes with a size of 250 MB
# /current_backups/mysecurefiles : The directory to encrypt and back up
# s3+https://my-backups/mystuff : The Amazon S3 bucket ('my-backups') and directory ('mystuff') to place the encrypted backups in
export AWS_ACCESS_KEY_ID=your_aws_access_key_id
export AWS_SECRET_ACCESS_KEY=your_aws_secret_access_key
export PASSPHRASE=secretpassphrase
duplicity --full-if-older-than 30D --volsize 250 /current_backups/mysecurefiles s3+https://my-backups/mystuff
```
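Typing the `export` lines above interactively can leave your AWS keys and passphrase in your shell history. One alternative, sketched here under the assumption of a hypothetical root-only file (for example `/root/.duplicity-backup.env`) holding the same three `NAME=value` assignments, is to load the secrets from that file and clear them afterwards; the file and both function names are illustrative, not part of Duplicity.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: export the variables Duplicity reads (the AWS
# credentials and PASSPHRASE) from FILE, then fail if any of them is empty.
# FILE holds plain NAME=value lines. Pass an absolute path: a name without
# a slash is looked up in $PATH by ".".
load_backup_secrets() {
  local file="$1" name value
  set -a                        # auto-export every variable assigned while sourcing
  . "$file"
  set +a
  for name in AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY PASSPHRASE; do
    eval "value=\${$name:-}"    # read the variable whose name is in $name
    if [ -z "$value" ]; then
      echo "load_backup_secrets: $name is not set in $file" >&2
      return 1
    fi
  done
}

# Remove the secrets from the environment once Duplicity has finished.
clear_backup_secrets() {
  unset AWS_ACCESS_KEY_ID AWS_SECRET_ACCESS_KEY PASSPHRASE
}

# Example:
#   load_backup_secrets /root/.duplicity-backup.env
#   duplicity --full-if-older-than 30D --volsize 250 /current_backups/mysecurefiles s3+https://my-backups/mystuff
#   clear_backup_secrets
```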

```shell
# Duplicity: asymmetric encryption and signing with GnuPG, using the given keys to encrypt and sign the backups
# --encrypt-key : The ID of the GPG key you will be using to encrypt the data
# --sign-key : The ID of the GPG key you will be using to sign the backup files
# The encrypt-key needs to already be signed by the sign-key. Both keys need to be appropriately trusted.
# --full-if-older-than 30D : If a full backup hasn't been made in the last 30 days, create a new backup set starting with a full backup
# --volsize 250 : Create volumes with a size of 250 MB
# /current_backups/mysecurefiles : The directory to encrypt and back up
# s3+https://my-backups/mystuff : The Amazon S3 bucket ('my-backups') and directory ('mystuff') to place the encrypted backups in
export AWS_ACCESS_KEY_ID=your_aws_access_key_id
export AWS_SECRET_ACCESS_KEY=your_aws_secret_access_key
export PASSPHRASE=secretsigningpassphrase
duplicity --encrypt-key "<encrypt-key-id>" --sign-key "<sign-key-id>" --full-if-older-than 30D --volsize 250 /current_backups/mysecurefiles s3+https://my-backups/mystuff
```
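A mistyped key ID is an easy mistake with the asymmetric command above. This minimal sketch checks that each ID is an 8-, 16- or 40-character hexadecimal string (the usual short ID, long ID and fingerprint forms of a GnuPG key) before starting the backup. `is_gpg_key_id` and `backup_signed` are hypothetical helpers, the key IDs in the example are made up, and the format check is only a sanity check, not proof that the key exists in your keyring.

```shell
#!/bin/sh
set -eu

# Hypothetical check: succeed only if $1 is an 8-, 16- or 40-character
# hexadecimal string (short key ID, long key ID or fingerprint).
is_gpg_key_id() {
  local id="$1"
  case $id in
    ''|*[!0-9A-Fa-f]*) return 1 ;;   # empty, or contains a non-hex character
  esac
  case ${#id} in
    8|16|40) return 0 ;;
    *) return 1 ;;
  esac
}

# Hypothetical wrapper around the asymmetric backup command above: it checks
# both key IDs before Duplicity is started, then runs the same command.
backup_signed() {
  local encrypt_key="$1" sign_key="$2" source="$3" target="$4" key_id
  for key_id in "$encrypt_key" "$sign_key"; do
    if ! is_gpg_key_id "$key_id"; then
      echo "backup_signed: not a GnuPG key ID: $key_id" >&2
      return 1
    fi
  done
  duplicity --encrypt-key "$encrypt_key" --sign-key "$sign_key" \
    --full-if-older-than 30D --volsize 250 "$source" "$target"
}

# Example (PASSPHRASE and AWS credentials exported as above):
#   backup_signed 0123456789ABCDEF 89ABCDEF01234567 \
#     /current_backups/mysecurefiles s3+https://my-backups/mystuff
```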

Two ways to check which files exist in the current backup on S3:

```shell
# Duplicity: symmetric encryption with GnuPG, using the given PASSPHRASE to access the encrypted files
# (PASSPHRASE must be the same one used to create the backup)
# list-current-files : List files in the most recent backup set
# s3+https://my-backups/mystuff : The Amazon S3 bucket ('my-backups') and directory ('mystuff') where the encrypted backups reside
export AWS_ACCESS_KEY_ID=your_aws_access_key_id
export AWS_SECRET_ACCESS_KEY=your_aws_secret_access_key
export PASSPHRASE=secretpassphrase
duplicity list-current-files s3+https://my-backups/mystuff
```

```shell
# Duplicity: asymmetric encryption and signing with GnuPG, using the given keys to view/verify the backups
# list-current-files : List files in the most recent backup set
# --encrypt-key : The ID of the GPG key you will be using to access the encrypted data
# --sign-key : The ID of the GPG key you will be using to verify the signature on the backup files
# The encrypt-key needs to already be signed by the sign-key. Both keys need to be appropriately trusted.
# s3+https://my-backups/mystuff : The Amazon S3 bucket ('my-backups') and directory ('mystuff') where the encrypted backups reside
export AWS_ACCESS_KEY_ID=your_aws_access_key_id
export AWS_SECRET_ACCESS_KEY=your_aws_secret_access_key
export PASSPHRASE=secretsigningpassphrase
duplicity list-current-files --encrypt-key "<encrypt-key-id>" --sign-key "<sign-key-id>" s3+https://my-backups/mystuff
```

Two ways to restore files from the remote backup on S3:

```shell
# Duplicity: symmetric encryption with GnuPG, using the given PASSPHRASE to restore the encrypted files
# (PASSPHRASE must be the same one used to create the backup)
# restore : Restore files from the most recent backup set
# s3+https://my-backups/mystuff : The Amazon S3 bucket ('my-backups') and directory ('mystuff') where the encrypted backups reside
# /restored/files : The location to restore the files to
export AWS_ACCESS_KEY_ID=your_aws_access_key_id
export AWS_SECRET_ACCESS_KEY=your_aws_secret_access_key
export PASSPHRASE=secretpassphrase
duplicity restore s3+https://my-backups/mystuff /restored/files
```
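The restore above brings back the whole of the most recent backup. Duplicity can also restore a single path, and restore it as of an earlier point in time, through its `--file-to-restore` and `--time` options. A minimal sketch, assuming the same S3 target and a hypothetical file `reports/q1.xls` inside the backed-up directory; the `restore_path_as_of` function is illustrative.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: restore one path from the backup at TARGET as it was
# AGE ago (a Duplicity interval such as 3D for three days or 2W for two weeks)
# and write it to DEST. PATH_IN_BACKUP is relative to the directory that was
# backed up, e.g. "reports/q1.xls" rather than an absolute path.
restore_path_as_of() {
  local target="$1" path_in_backup="$2" age="$3" dest="$4"
  duplicity restore --time "$age" --file-to-restore "$path_in_backup" \
    "$target" "$dest"
}

# Example (PASSPHRASE and AWS credentials exported as above):
#   restore_path_as_of s3+https://my-backups/mystuff reports/q1.xls 3D /restored/q1.xls
```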

```shell
# Duplicity: asymmetric encryption and signing with GnuPG, using the given keys to restore the backups
# restore : Restore files from the most recent backup set
# --encrypt-key : The ID of the GPG key you will be using to access the encrypted data
# --sign-key : The ID of the GPG key you will be using to verify the signature on the backup files
# The encrypt-key needs to already be signed by the sign-key. Both keys need to be appropriately trusted.
# s3+https://my-backups/mystuff : The Amazon S3 bucket ('my-backups') and directory ('mystuff') where the encrypted backups reside
# /restored/files : The location to restore the files to
export AWS_ACCESS_KEY_ID=your_aws_access_key_id
export AWS_SECRET_ACCESS_KEY=your_aws_secret_access_key
export PASSPHRASE=secretsigningpassphrase
duplicity restore --encrypt-key "<encrypt-key-id>" --sign-key "<sign-key-id>" s3+https://my-backups/mystuff /restored/files
```
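Restoring to a scratch location now and then is a cheap way to confirm the backups are actually usable. This hypothetical restore drill, a minimal sketch rather than a Duplicity feature, restores the latest backup into a temporary directory and compares it with the live directory using `diff -r`; any extra arguments (such as the `--encrypt-key` and `--sign-key` options above) are passed straight through to `duplicity restore`. The `restore_drill` function name is illustrative.

```shell
#!/bin/sh
set -eu

# Hypothetical restore drill: restore the latest backup at TARGET into a fresh
# scratch directory and compare it with the live SOURCE directory.
# Usage: restore_drill TARGET SOURCE [extra duplicity options...]
# Returns 0 when the trees match, non-zero when they differ or restore fails.
# Files changed since the last backup also count as differences, so run it
# right after a backup.
restore_drill() {
  local target="$1" source="$2" scratch status
  shift 2
  scratch=$(mktemp -d)
  # Duplicity may refuse to restore over an existing path, so restore into
  # a subdirectory that does not exist yet.
  if ! duplicity restore "$@" "$target" "$scratch/restored"; then
    rm -rf "$scratch"
    return 1
  fi
  status=0
  diff -r "$source" "$scratch/restored" || status=$?
  rm -rf "$scratch"
  return "$status"
}

# Example (asymmetric setup, same placeholders as above):
#   restore_drill s3+https://my-backups/mystuff /current_backups/mysecurefiles \
#     --encrypt-key "<encrypt-key-id>" --sign-key "<sign-key-id>"
```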
