Recently, my pair and I set up end-to-end Cypress testing for an existing web project. Setting up end-to-end testing from scratch for an existing application can be a bit intimidating, so we broke the process down into smaller steps.

Here, we’ll discuss the first step: identifying the services the project depends on and creating test versions of those services. With this test infrastructure in place, we have the groundwork for running end-to-end tests locally.

The Existing Application

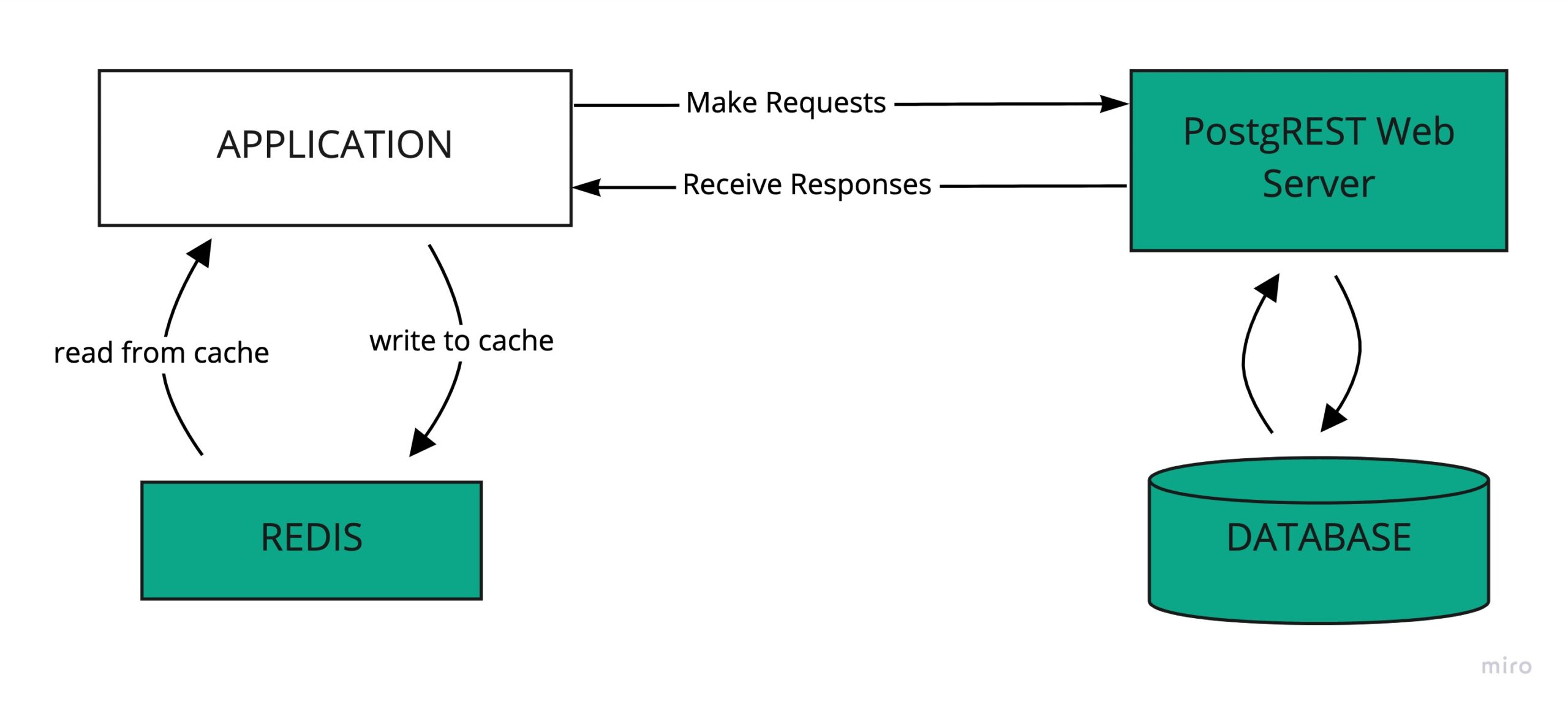

To start, we drew a diagram of the application and the major services the application depended on. It looked something like this:

The application uses Redis to cache API responses. It uses a PostgreSQL database and a PostgREST web server to provide a RESTful API for the database.

Using Docker to Set Up the Infrastructure

To set ourselves up for end-to-end tests, we wanted to create mocked or local test versions of all those external dependencies (Redis, the PostgreSQL database, and the PostgREST web server).

Docker lets us easily spin up test versions of those external dependencies by creating an isolated network with a container for each dependency. We can then point the end-to-end tests at those test containers. Because all of the dependency containers share the same network, we can verify that different parts of the application (say, the caching and the database queries) work together correctly.

So how do we go about spinning up a database, a PostgREST API, and a Redis instance in Docker? Well, Docker Compose lets us define multi-container Docker applications in a YAML file. To start all of those containers before running our tests, we run a single docker compose up command, passing our test compose file with -f.

To describe the containers Docker needs to run, we created a docker-compose.test.yaml file. Our file looks like this:

```yaml
version: "3"

services:
  # Test Redis, published on host port 6380 (container port 6379).
  redis:
    container_name: redis-test
    image: redis:3.2
    ports:
      - "6380:6379"

  # Test PostgreSQL, published on host port 5433 (container port 5432).
  db:
    container_name: db-test
    image: postgres:11.13
    environment:
      POSTGRES_DB: test
      POSTGRES_HOST_AUTH_METHOD: trust
    ports:
      - "5433:5432"

  # PostgREST API in front of the test database, on host port 3001.
  postgrest:
    container_name: postgrest-test
    image: gcr.io/prepsports-staging/postgrest:5
    ports:
      - "3001:3001"
    environment:
      PGRST_DB_SCHEMA: api
      # Other containers reach the DB by container name and internal port.
      PGRST_DB_URI: postgres://authenticator@db-test:5432/test
      PGRST_JWT_SECRET: tipoff-tenured-scorpion-razz-credible-macro-parole
      PGRST_SERVER_PROXY_URI: https://postgrest:3001
      PGRST_SERVER_PORT: 3001
      PGRST_DB_ANON_ROLE: anonymous
    depends_on:
      - db
```

This Docker Compose file defines three containers:

Redis

Creates an instance of Redis. The application uses Redis for caching.

We used the public Redis image from Docker Hub. By default, Redis listens on port 6379. Since we already had a development Redis container running on port 6379 on our local machines, we mapped port 6379 in the container to port 6380 on the host. When running our end-to-end tests, we then set our Redis port environment variable to 6380.
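For the app to reach the test Redis rather than the development one, its Redis settings have to use the remapped host port. Here is a minimal sketch, assuming the app reads hypothetical REDIS_HOST, REDIS_PORT, and REDIS_URL environment variables; substitute whatever names your configuration actually uses, and source the snippet before starting the tests:

```shell
# Sketch: point the app at the test Redis container instead of the dev one.
# REDIS_HOST, REDIS_PORT and REDIS_URL are hypothetical variable names;
# use whatever your app's configuration actually reads.

# The compose file publishes container port 6379 on host port 6380
# ("6380:6379"), so the development Redis on 6379 is left alone.
export REDIS_HOST=localhost
export REDIS_PORT=6380
export REDIS_URL="redis://${REDIS_HOST}:${REDIS_PORT}"

echo "Tests will use Redis at ${REDIS_URL}"
```

Once the containers are up, redis-cli -p 6380 ping should answer PONG from the test instance, which is a quick way to confirm the tests are not talking to the development Redis on 6379.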

DB

Creates a PostgreSQL instance.

We used the public PostgreSQL image from Docker Hub. By default, PostgreSQL listens on port 5432. Again, since we already had a development PostgreSQL container running locally on port 5432, we mapped port 5432 in the container to port 5433 on the host. We then connect to the test PostgreSQL instance locally on port 5433.

We set two environment variables for the PostgreSQL image: POSTGRES_DB sets the name of the test database, and POSTGRES_HOST_AUTH_METHOD set to “trust” lets us connect to the test database without a password.
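One detail worth spelling out: the same test database has two addresses. From your machine it is localhost on the published port 5433, while other containers on the Compose network (such as PostgREST) reach it by container name on its internal port 5432. A small sketch of that distinction, using a hypothetical test_db_url helper together with the database name, ports, and container name from the compose file (no password is needed because of the trust auth method):

```shell
# Sketch: build a connection URL for the test database.
# test_db_url is a hypothetical helper; "test", 5433/5432 and db-test come
# from the compose file. No password: the container uses trust auth.
# Usage: test_db_url [USER] [host|container]
test_db_url() {
  local user="${1:-postgres}"
  local where="${2:-host}"
  case "$where" in
    # From your machine: localhost plus the published host port.
    host) echo "postgres://${user}@localhost:5433/test" ;;
    # From another container on the Compose network: container name plus internal port.
    container) echo "postgres://${user}@db-test:5432/test" ;;
    *) echo "unknown location: ${where}" >&2; return 1 ;;
  esac
}
```

For example, psql "$(test_db_url)" -c 'select 1;' connects from the host, while test_db_url authenticator container produces exactly the PGRST_DB_URI value used in the compose file.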

PostgREST

PostgREST provides a RESTful API for the PostgreSQL instance. Rather than a public Docker Hub image, we used a PostgREST image hosted on Google Container Registry (gcr.io/prepsports-staging/postgrest:5).

To connect PostgREST to our PostgreSQL instance, we set the PGRST_DB_URI env variable to point to our PostgreSQL container “db-test” on port 5432. Inside the Compose network, containers reach each other by container name and container port, so PostgREST uses 5432 rather than the host port 5433.

We set PGRST_SERVER_PORT to 3001 so that PostgREST listens on port 3001 in the container. Then, we mapped port 3001 in the container to port 3001 on the local machine, and set the env variable for the API host to localhost:3001.

We also set PGRST_JWT_SECRET. When running end-to-end tests, the application's JWT secret env variable has to match the PGRST_JWT_SECRET value in the compose file, because PostgREST rejects tokens signed with any other secret.
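To make the matching requirement concrete: PostgREST checks each bearer token's HS256 signature against PGRST_JWT_SECRET and switches to the database role named in the token's role claim. The sketch below signs such a token with openssl, using hypothetical b64url and sign_jwt helpers; in the actual tests, the application would more likely mint tokens with its own JWT library and its configured secret.

```shell
# Sketch: sign an HS256 JWT with the same secret PostgREST was started with.
# b64url and sign_jwt are hypothetical helper names; requires openssl.

# Base64url-encode stdin on a single line, without '=' padding.
b64url() {
  openssl base64 -e -A | tr '/+' '_-' | tr -d '='
}

# Usage: sign_jwt SECRET PAYLOAD_JSON
# Prints header.payload.signature, signed with HMAC-SHA256.
sign_jwt() {
  local secret="$1"
  local payload="$2"
  local header body signature
  header=$(printf '%s' '{"alg":"HS256","typ":"JWT"}' | b64url)
  body=$(printf '%s' "$payload" | b64url)
  signature=$(printf '%s.%s' "$header" "$body" \
    | openssl dgst -sha256 -hmac "$secret" -binary | b64url)
  printf '%s.%s.%s\n' "$header" "$body" "$signature"
}
```

The resulting token goes in an Authorization: Bearer header on requests to localhost:3001. A payload such as {"role":"web_user"} only works if that role (web_user is a placeholder here) exists in the database; a token signed with any other secret should be rejected.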

That’s all the infrastructure we needed to run end-to-end tests! To start up the infrastructure and get the database ready to go (with migrations applied), we wrote a simple script.

This script:

- Creates and starts up the database, PostgREST, and Redis containers in detached mode (that is, in the background)

- Waits for the database to start up

- Once the database starts up, runs the database migrations

- Restarts the PostgREST server after the database migrations to pick up changes to the database schema

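```bash
#!/usr/bin/env bash
# Start the test containers, wait for PostgreSQL, run migrations, restart PostgREST.
set -euo pipefail

# Absolute path of the directory containing this script.
dir="$(cd "$(dirname "${BASH_SOURCE[0]}")" && pwd)"

# Create and start the Redis, PostgreSQL and PostgREST containers in the background.
docker-compose -f docker-compose.test.yaml up -d

echo "✅ Started DB, PostgREST, Redis Containers"

echo 'Waiting for the test postgres to start up...'

# Succeeds once the test database on host port 5433 accepts queries.
pg_ready() {
  echo 'select 1;' | psql -h localhost -U postgres -p 5433 -d test > /dev/null 2>&1
}

# Exponential backoff: sleep 1, 2, 4, 8, then 16 seconds between checks.
for i in $(seq 0 4); do
  if pg_ready; then
    break
  fi
  echo 'Still waiting for the postgres test database to boot up on port 5433 😑'
  sleep $((2**i))
done

# Fail fast instead of running migrations against a database that never came up.
if ! pg_ready; then
  echo "❌ Test database did not start on port 5433" >&2
  exit 1
fi

echo "Running sqitch deploy on test db..."

if ! sqitch deploy local-test > /dev/null 2>&1; then
  echo "Unable to deploy test sqitch migrations!" >&2
  exit 1
fi

echo "✅ Ran Sqitch Deploy"

# Restart the PostgREST container so it picks up the schema changes from the migrations.
docker restart postgrest-test

echo "✅ Restarted PostgREST to pick up DB changes"
```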
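The wait loop in the script is a hand-rolled exponential backoff. If other scripts need the same pattern, it can be pulled out into a helper; the sketch below uses a hypothetical retry_with_backoff function that retries any command, doubling the delay after each failure (1, 2, 4, ... seconds).

```shell
# Sketch: a reusable version of the script's wait loop.
# retry_with_backoff is a hypothetical helper name.
# Usage: retry_with_backoff MAX_ATTEMPTS COMMAND [ARGS...]
# Runs COMMAND until it succeeds, doubling the delay after each failure.
# Returns 1 once MAX_ATTEMPTS attempts have all failed.
retry_with_backoff() {
  local max_attempts="$1"
  shift
  local attempt=1
  local delay=1
  until "$@"; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      return 1
    fi
    echo "Attempt ${attempt}/${max_attempts} failed; retrying in ${delay}s..." >&2
    sleep "$delay"
    attempt=$((attempt + 1))
    delay=$((delay * 2))
  done
  return 0
}
```

For instance, retry_with_backoff 5 pg_isready -h localhost -p 5433 -q could replace the psql probe: pg_isready ships with the PostgreSQL client tools and exits with status 0 once the server accepts connections. With 5 attempts, the helper waits at most 1 + 2 + 4 + 8 = 15 seconds, since it does not sleep after the final failure.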

And with that, our infrastructure is all set up, and our database is migrated and ready to go!

Setting up End-to-End Cypress Testing from Scratch

Now that the database is ready to go, the next step is generating some test data for our e2e tests to run against. We’ll cover that in the next post.