Recently, I heard about a feature of the OpenAI API: function calling. It piqued my interest because it lets one of OpenAI’s Large Language Models (LLMs) call functions inside my application (more precisely, ask my application to call them). Let’s look at function calling and how we can use it to provide application-specific data to the LLM.

Function Calling

Function calling is particularly intriguing because it extends the LLM’s capabilities beyond its pre-existing knowledge. You could use a function to give the LLM additional information, such as data fetched from your data store or the result of application-specific logic.

Here’s a simplified view of what happens when using function calling:

- Defining Functions. You define the functions the LLM can call, along with metadata describing their purpose and parameters.

- AI Invocation. Based on the context of the conversation, the LLM determines that a function call is needed, selects the appropriate function, and responds with its name and the arguments to pass instead of a normal reply.

- Function Execution. Your application, not the LLM, executes the function (e.g., retrieving information from a database).

- Returning Results. The application passes the function’s results back to the LLM, which incorporates this information into its response to the user.
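The four steps above can be sketched as a small, transport-agnostic loop. This is a minimal sketch, not the demo’s code: `runWithFunctions`, the injected `complete` function (a stand-in for a Chat Completions request that is assumed to send the function definitions along with the messages), and the `handlers` map are hypothetical names chosen for illustration. The response fields it reads (`finish_reason === "function_call"` and `message.function_call.name`/`arguments`) are the ones the demo below relies on.

```javascript
// Minimal sketch of one round of function calling.
// `complete(messages)` is a hypothetical stand-in for a Chat Completions request;
// `handlers` maps function names to local implementations.
async function runWithFunctions(userText, complete, handlers) {
  const messages = [{ role: "user", content: userText }];

  // Steps 1-2: `complete` is assumed to send the function definitions with the
  // messages; the model decides whether to request one of them.
  const first = await complete(messages);
  const choice = first.choices[0];
  if (choice.finish_reason !== "function_call") {
    return choice.message.content; // Ordinary answer, no function needed.
  }

  // Step 3: the application, not the model, executes the requested function.
  const { name, arguments: rawArgs } = choice.message.function_call;
  const handler = handlers[name];
  if (!handler) {
    throw new Error(`Model requested an unknown function: ${name}`);
  }
  const result = await handler(JSON.parse(rawArgs));

  // Step 4: send the model's request and the function's result back,
  // then return the model's final answer.
  messages.push(choice.message);
  messages.push({ role: "function", name, content: JSON.stringify(result) });
  const second = await complete(messages);
  return second.choices[0].message.content;
}
```

Injecting `complete` keeps the control flow testable with canned responses; in a real application it would POST the messages and function definitions to the API.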

Weather Demo

To demonstrate function calling, I’ve set up a simple web application. It chats with users and has a weather function implemented in the application code, in this case JavaScript. For simplicity, the weather data is fake: the function just returns a random number. Still, it is enough to show how function calling works. Anything you can do with a normal function should be achievable with OpenAI function calling.
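The fake weather lookup itself is just ordinary code. As a minimal sketch, assuming a hypothetical `getCurrentWeather` helper that returns structured data instead of a sentence and accepts an optional random-number source so it can be tested deterministically, it might look like this; like the demo, it picks a whole number from 0 to 100:

```javascript
// Hypothetical stand-in for a real weather lookup: returns fake data.
// `random` defaults to Math.random but can be replaced for deterministic tests.
function getCurrentWeather({ location }, random = Math.random) {
  // random() is in [0, 1), so this yields an integer from 0 to 100 inclusive.
  const temperature = Math.floor(random() * 101);
  return { location, temperature };
}
```

Returning an object rather than a preformatted sentence leaves the phrasing to the LLM; swapping in a real data source would only change the body of this function.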

The backend uses Express. When it receives a POST request to /chat, it calls the Chat Completions API. If the LLM’s response asks for the weather function, the backend runs the function, sends the result back to the Chat Completions API, and returns the resulting completion to the front end. If the LLM has determined that the weather function isn’t needed, the backend returns the first completion directly.

Note how, when calling the Chat Completions API, we define a function called get_current_weather, describing what it does and what it requires; in our case, that is the city and state whose (fake) weather we want to know.

```javascript
import fetch from "node-fetch";
import express from "express";

const app = express();
const port = 3000;
// Replace with your OpenAI API key (ideally loaded from an environment variable, not hard-coded).
const apiKey = "<api-key>";

app.use(express.json());

// Let the front end, served from a different origin, call this server.
app.use((_, res, next) => {
  res.header("Access-Control-Allow-Origin", "*");
  res.header(
    "Access-Control-Allow-Headers",
    "Origin, X-Requested-With, Content-Type, Accept"
  );
  next();
});

app.post("/chat", async (req, res) => {
  const { message } = req.body;
  try {
    const response = await processChatRequest(message);
    res.json({ message: response });
  } catch (error) {
    console.error(error);
    res.status(500).json({ message: "Sorry, something went wrong." });
  }
});

app.listen(port, () =>
  console.log(`Server running at http://localhost:${port}`)
);

const processChatRequest = async (message) => {
  const messages = [
    {
      role: "user",
      content: `${message}. Use the api call if needed, where needed list things in bulleted lists`,
    },
  ];

  let response = await callChatCompletion(messages);
  let choice = response.choices[0];

  // The LLM wants the application to run a function instead of answering directly.
  if (choice.finish_reason === "function_call") {
    const functionCall = choice.message.function_call;
    if (functionCall.name !== "get_current_weather") {
      throw new Error(`Unknown function requested: ${functionCall.name}`);
    }

    const { location } = JSON.parse(functionCall.arguments);
    // Fake weather data: a random whole number from 0 to 100.
    const weather = `The weather in ${location} is ${Math.floor(
      Math.random() * 101
    )} degrees.`;

    // Send back the LLM's function call followed by the function's result.
    messages.push(choice.message);
    messages.push({
      role: "function",
      name: functionCall.name,
      content: JSON.stringify(weather),
    });

    response = await callChatCompletion(messages);
    choice = response.choices[0];
  }

  return choice.message.content;
};

const callChatCompletion = async (messages) => {
  const response = await fetch("https://api.openai.com/v1/chat/completions", {
    method: "POST",
    headers: {
      Authorization: `Bearer ${apiKey}`,
      "Content-Type": "application/json",
    },
    body: JSON.stringify({
      model: "gpt-3.5-turbo",
      messages,
      // Describe get_current_weather so the LLM knows when and how to request it.
      functions: [
        {
          name: "get_current_weather",
          description: "Get the current weather in a given location.",
          parameters: {
            type: "object",
            properties: {
              location: {
                type: "string",
                description: "The city and state, e.g. San Francisco, CA",
              },
            },
            required: ["location"],
          },
        },
      ],
    }),
  });

  const data = await response.json();
  if (!response.ok) {
    // Error responses carry details in data.error.message.
    throw new Error(data.error?.message ?? `OpenAI API error: ${response.status}`);
  }
  return data;
};
```
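The model produces `function_call.arguments` as a JSON-encoded string, and it is not guaranteed to be valid JSON or to include every required parameter, so it can be worth checking the arguments against the function definition before running anything. This is a minimal sketch: `weatherFunction` mirrors the get_current_weather definition in the backend code, while `parseWeatherArguments` is a hypothetical helper, not part of any OpenAI library, and it only checks `"string"` types because that is all this definition uses.

```javascript
// The function definition sent to the API (same shape as in the backend code).
const weatherFunction = {
  name: "get_current_weather",
  description: "Get the current weather in a given location.",
  parameters: {
    type: "object",
    properties: {
      location: {
        type: "string",
        description: "The city and state, e.g. San Francisco, CA",
      },
    },
    required: ["location"],
  },
};

// Hypothetical helper: parse the model's JSON-encoded arguments and check the
// required parameters before executing the function.
function parseWeatherArguments(rawArguments, definition = weatherFunction) {
  let args;
  try {
    args = JSON.parse(rawArguments);
  } catch {
    throw new Error(`Arguments are not valid JSON: ${rawArguments}`);
  }
  if (args === null || typeof args !== "object" || Array.isArray(args)) {
    throw new Error("Arguments must be a JSON object");
  }
  for (const key of definition.parameters.required) {
    if (!(key in args)) {
      throw new Error(`Missing required argument: ${key}`);
    }
    // This sketch only checks "string" types, the only type the weather definition uses.
    const expectedType = definition.parameters.properties[key]?.type;
    if (expectedType === "string" && typeof args[key] !== "string") {
      throw new Error(`Argument ${key} must be a string`);
    }
  }
  return args;
}
```

In `processChatRequest`, a helper like this could replace the bare `JSON.parse(functionCall.arguments)` call, so a malformed request fails with a clear error instead of an unhandled `SyntaxError` or a reply about the weather in `undefined`.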

The frontend is straightforward: it uses fetch to call the backend endpoint and adds each chat request and response to the page:

```html
<!DOCTYPE html>
<html lang="en">
  <head>
    <meta charset="UTF-8" />
    <title>OpenAI Assistant Functions</title>
    <style>
      .question {
        font-size: 0.9em;
        color: #666;
      }
    </style>
  </head>
  <body>
    <h1>OpenAI Assistant Functions</h1>
    <form id="form">
      <input type="text" id="input" placeholder="Enter Input" />
      <button type="submit">Send</button>
    </form>
    <div id="chat"></div>
    <script>
      document.addEventListener("DOMContentLoaded", function () {
        const form = document.getElementById("form");
        const input = document.getElementById("input");
        const chatDiv = document.getElementById("chat");

        form.addEventListener("submit", function (e) {
          e.preventDefault();
          const message = input.value;
          if (message) {
            addQuestion(message);
            sendMessage(message);
            input.value = "";
          }
        });

        // Use textContent rather than innerHTML so user input and model output
        // are displayed as text instead of being interpreted as HTML.
        function addQuestion(question) {
          const questionDiv = document.createElement("div");
          questionDiv.className = "question";
          questionDiv.textContent = question;
          chatDiv.appendChild(questionDiv);
        }

        function addResponse(text) {
          const responseDiv = document.createElement("div");
          responseDiv.textContent = text;
          chatDiv.appendChild(responseDiv);
          chatDiv.appendChild(document.createElement("hr"));
        }

        function sendMessage(message) {
          fetch("http://localhost:3000/chat", {
            method: "POST",
            headers: {
              "Content-Type": "application/json",
            },
            body: JSON.stringify({ message }),
          })
            .then((response) => response.json())
            .then((data) => addResponse(data.message))
            .catch((error) => console.error("Error fetching weather:", error));
        }
      });
    </script>
  </body>
</html>
```

The Chat

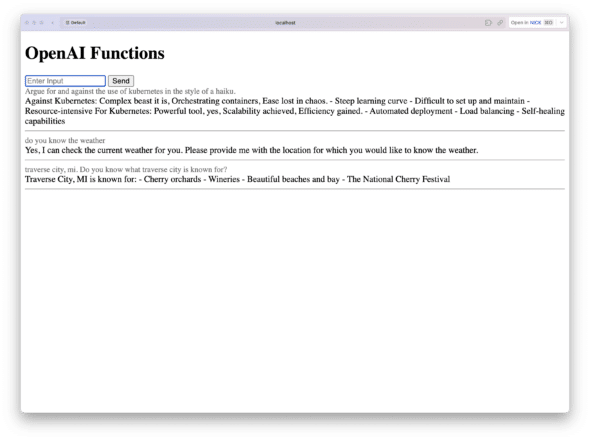

Let’s break down the chat I had with this demo app:

- When asked to write a haiku, it responds directly without requesting weather data from our app, since the request has nothing to do with the weather. Neat!

- When asked whether it knows anything about the weather, it recognizes from the function definition that a location is required to call the function, so it asks for the location before calling it. Neat!

- Once the location is provided, the LLM responds by telling the application to run the get_current_weather function. Note: the final response included more information about the city, but it used the weather data returned by the get_current_weather function to complete the chat. Neat!

OpenAI’s Function Calling: A Powerful Tool

OpenAI’s function calling can be a powerful tool for integrating LLMs into applications, giving the LLM a hook into real-time application data or logic. Function calling can enable a more nuanced and interactive dialogue between users and LLMs. I am excited to see how people use this feature to integrate LLMs into their applications.