Article summary

Sometimes, we work with APIs that require whitelisting of origin IP addresses for access. This can be challenging when applications run on SaaS platforms hosted in the public cloud: there is no guarantee that your application will make requests from a consistent IP address (or range of IP addresses).

For example, when hosting on Heroku, your origin IP address could conceivably be any address in AWS's vast address space.

To work around this, we can set up a secure HTTP proxy with a static IP that we use to proxy all API requests from our application.

Several Heroku add-ons make this very easy, but their costs can quickly balloon as the number of proxy requests increases. The add-ons are great for development, but when we start to consider production scenarios, a self-managed HTTP proxy can be a nice alternative.

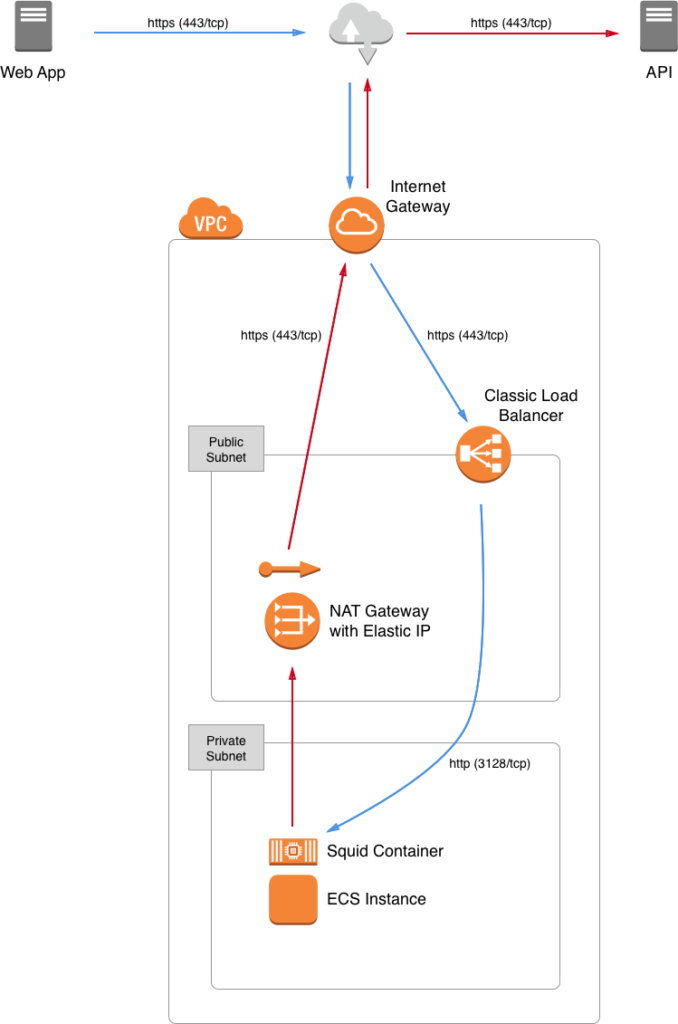

Architecture

The secure HTTP proxy that I built runs on AWS ECS using Squid. I rely on an AWS Classic Load Balancer to provide SSL termination for Squid, and then Squid connects to the outside world via a NAT gateway with an Elastic IP address. There is no requirement that Squid run in a container on ECS, but this makes deployment and maintenance convenient.

Security

Unlike traditional HTTP proxies, my proxy requires clients to use SSL to connect to it. This keeps information about proxy clients (including proxy credentials) from leaking in plaintext. Once the client has connected, the proxy handles standard HTTP requests, and also HTTPS requests via CONNECT. Not all client libraries support HTTPS-enabled proxies, but more are beginning to do so.

```
acl SSL_ports port 443
acl Safe_ports port 80
acl Safe_ports port 443
acl CONNECT method CONNECT

http_access deny !Safe_ports
http_access deny CONNECT !SSL_ports
```
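Squid evaluates `http_access` lines top to bottom and acts on the first one that matches. The two port rules above can be modeled in a few lines of JavaScript; this is an illustrative sketch (the rule objects and `firstMatch` are hypothetical names), not how Squid itself is implemented:

```javascript
// Hypothetical model of the port ACLs above; Squid itself is not involved.
const SSL_PORTS = new Set([443]);
const SAFE_PORTS = new Set([80, 443]);

// Each rule mirrors one http_access line: an action plus a match predicate.
const rules = [
  // http_access deny !Safe_ports
  { action: "deny", matches: (req) => !SAFE_PORTS.has(req.port) },
  // http_access deny CONNECT !SSL_ports
  { action: "deny", matches: (req) => req.method === "CONNECT" && !SSL_PORTS.has(req.port) },
];

// Return the action of the first matching rule, or null if none match
// (in the full config, later rules such as "allow authenticated" decide).
function firstMatch(req) {
  for (const rule of rules) {
    if (rule.matches(req)) return rule.action;
  }
  return null;
}

console.log(firstMatch({ method: "GET", port: 8080 }));    // deny
console.log(firstMatch({ method: "CONNECT", port: 80 }));  // deny
console.log(firstMatch({ method: "CONNECT", port: 443 })); // null
```

Because the first match wins, any `allow` rule placed above these two lines would let matching requests skip the port checks entirely.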

Authentication

I configured Squid to require explicit authentication in order to connect. For simplicity, I used Basic auth (which makes connecting over HTTPS all the more important):

```
# Only allow proxy_auth (authenticated) users
acl authenticated proxy_auth REQUIRED
http_access allow authenticated

# And finally deny all other access to this proxy
http_access deny all
```
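On the client side, Basic auth for a proxy is just a `Proxy-Authorization` header carrying `base64(user:password)`. A minimal sketch of building and decoding that header with Node's built-in `Buffer` (the helper names are hypothetical, and the credentials are placeholders):

```javascript
// Build the value of a Proxy-Authorization header for Basic auth.
function basicProxyAuth(user, password) {
  return "Basic " + Buffer.from(`${user}:${password}`, "utf8").toString("base64");
}

// Decode a header value back into its parts (roughly what a proxy does before
// handing the credentials to its auth helper). Returns null if malformed.
function parseBasicProxyAuth(header) {
  const match = /^Basic\s+([A-Za-z0-9+/=]+)$/i.exec(header);
  if (!match) return null;
  const decoded = Buffer.from(match[1], "base64").toString("utf8");
  const sep = decoded.indexOf(":");
  if (sep === -1) return null;
  return { user: decoded.slice(0, sep), password: decoded.slice(sep + 1) };
}

const header = basicProxyAuth("username", "password");
console.log(header);                      // Basic dXNlcm5hbWU6cGFzc3dvcmQ=
console.log(parseBasicProxyAuth(header)); // { user: 'username', password: 'password' }
```

Base64 is an encoding, not encryption: anyone who can read the header can recover the password, which is why the TLS connection to the proxy matters.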

Squid can be configured to support Basic auth with standard htpasswd files using the basic_ncsa_auth helper:

```
auth_param basic program /usr/lib/squid/basic_ncsa_auth /etc/squid/passwd
auth_param basic children 5
auth_param basic realm Proxy
auth_param basic credentialsttl 2 hours
auth_param basic casesensitive off
```

(See the full squid.conf below.)
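`auth_param basic program` delegates each credential check to a helper process (`basic_ncsa_auth` here, with `children 5` of them running). As I understand Squid's helper protocol, the helper reads one line per check on stdin containing the URL-escaped username and password separated by a space, and answers `OK` or `ERR`. The sketch below models that exchange against an in-memory table; it is illustrative only, and `USERS`, `handleLine`, and `runHelper` are hypothetical names:

```javascript
const readline = require("readline");

// Hypothetical plaintext user table, for illustration only. basic_ncsa_auth
// instead checks hashed entries in /etc/squid/passwd.
const USERS = new Map([["admin", "secret"]]);

// Answer one request line from Squid: "username password", both URL-escaped.
function handleLine(line, users) {
  const parts = line.trim().split(" ");
  if (parts.length < 2) return "ERR";
  try {
    const user = decodeURIComponent(parts[0]);
    const password = decodeURIComponent(parts[1]);
    return users.get(user) === password ? "OK" : "ERR";
  } catch (err) {
    return "ERR"; // malformed escape sequence
  }
}

// Wire handleLine to stdin/stdout, one reply line per request line.
// Not invoked here; a real helper would call runHelper(USERS) at startup.
function runHelper(users) {
  const rl = readline.createInterface({ input: process.stdin });
  rl.on("line", (line) => process.stdout.write(handleLine(line, users) + "\n"));
}
```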

Docker

I opted to wrap everything up into a Docker container in order to make adjusting and updating the configuration easy:

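```dockerfile
FROM alpine:3.7

# Default proxy credentials; override at run time (e.g. docker run -e BASIC_AUTH_PASSWORD=...)
ENV BASIC_AUTH_USER=admin
ENV BASIC_AUTH_PASSWORD=secret

# squid provides the proxy; apache2-utils provides htpasswd for the entrypoint
RUN apk add --no-cache apache2-utils squid

COPY squid.conf /etc/squid/squid.conf
COPY entrypoint.sh /usr/local/bin/entrypoint.sh
RUN chmod 755 /usr/local/bin/entrypoint.sh

EXPOSE 3128/tcp

# Exec form (no /bin/sh -c wrapper) so stop signals reach the entrypoint
ENTRYPOINT ["/usr/local/bin/entrypoint.sh"]
```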

The entrypoint script also lets us set the proxy credentials at container start via the BASIC_AUTH_USER and BASIC_AUTH_PASSWORD environment variables, without rebuilding the image:

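```sh
#!/bin/sh
# File: entrypoint.sh
set -e

# Create (-c) a bcrypt-hashed (-B) password file from the environment
/usr/bin/htpasswd -b -c -B /etc/squid/passwd "${BASIC_AUTH_USER}" "${BASIC_AUTH_PASSWORD}"

# Run Squid in the foreground (-N), replacing this shell so it receives signals
exec /usr/sbin/squid -f /etc/squid/squid.conf -NYCd 1
```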

Use

Now that the secure proxy is up and running, our web application can use it:

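```javascript
// File: example.js
const https = require("https");

// Basic credentials for the proxy (placeholders)
const proxyAuth = Buffer.from("username:password").toString("base64");

const req = https.request({
  // Connect over TLS to the proxy (the load balancer's SSL listener)
  host: "secure-proxy.example.com",
  port: 443,
  protocol: "https:",
  headers: {
    "Proxy-Authorization": `Basic ${proxyAuth}`,
  },
  method: "GET",
  // An absolute URL as the path makes this a proxy request; Squid fetches it
  path: "https://icanhazip.com",
}, (res) => {
  res.on("data", (data) => {
    console.log(data.toString());
  });
});

req.on("error", (err) => console.error(err.message));
req.end();
```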
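The example above sends the absolute `https://` URL to Squid and lets Squid make the TLS connection to the destination. For end-to-end TLS, a client can instead use CONNECT, as mentioned in the Security section: open a TLS connection to the proxy, ask it to tunnel to the destination, then start a second TLS session inside that tunnel. A minimal sketch using Node's built-in `tls` module, assuming the same placeholder host and credentials as example.js (`buildConnectRequest` and `connectViaProxy` are hypothetical names):

```javascript
const tls = require("tls");

// Build the CONNECT request line plus headers sent to the proxy.
function buildConnectRequest(host, port, user, password) {
  const auth = Buffer.from(`${user}:${password}`).toString("base64");
  return (
    `CONNECT ${host}:${port} HTTP/1.1\r\n` +
    `Host: ${host}:${port}\r\n` +
    `Proxy-Authorization: Basic ${auth}\r\n` +
    `\r\n`
  );
}

// Open TLS to the proxy, request a tunnel, then run TLS to the target inside it.
function connectViaProxy(proxyHost, targetHost, targetPort, user, password, onSecure) {
  const proxySocket = tls.connect({ host: proxyHost, port: 443, servername: proxyHost }, () => {
    proxySocket.write(buildConnectRequest(targetHost, targetPort, user, password));
  });
  proxySocket.once("data", (chunk) => {
    // Sketch simplification: assumes the whole response header arrives in one chunk.
    const statusLine = chunk.toString().split("\r\n")[0];
    if (!/^HTTP\/1\.[01] 200/.test(statusLine)) {
      proxySocket.destroy(new Error("Proxy refused CONNECT: " + statusLine));
      return;
    }
    // Tunnel is open: start TLS with the destination over the proxy connection.
    const secure = tls.connect({ socket: proxySocket, servername: targetHost }, () => onSecure(secure));
  });
  proxySocket.on("error", (err) => console.error(err.message));
}

// Example (needs the real proxy to be reachable):
// connectViaProxy("secure-proxy.example.com", "icanhazip.com", 443, "username", "password", (socket) => {
//   socket.write("GET / HTTP/1.1\r\nHost: icanhazip.com\r\nConnection: close\r\n\r\n");
//   socket.on("data", (chunk) => process.stdout.write(chunk));
// });
```

With this approach Squid only relays encrypted bytes to port 443 (permitted by `http_access deny CONNECT !SSL_ports`) and never sees the request to icanhazip.com itself.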

And running it:

```
jk@GERTY ~ $ node example.js
1.2.3.4
```

🎉 Tada! We now have a secure HTTP proxy with a static IP.

Resources…

Squid

Here is the full squid.conf:

```
# Basic auth against the htpasswd file written by entrypoint.sh
auth_param basic program /usr/lib/squid/basic_ncsa_auth /etc/squid/passwd
auth_param basic children 5
auth_param basic realm Proxy
auth_param basic credentialsttl 2 hours
auth_param basic casesensitive off

acl SSL_ports port 443
acl Safe_ports port 80
acl Safe_ports port 443
acl CONNECT method CONNECT
acl authenticated proxy_auth REQUIRED

# http_access rules are evaluated top to bottom and the first match wins,
# so the deny rules must come before "allow authenticated"
http_access deny !Safe_ports
http_access deny CONNECT !SSL_ports
http_access allow localhost manager
http_access deny manager
http_access deny to_localhost
http_access allow authenticated
http_access allow localhost

# And finally deny all other access to this proxy
http_access deny all

http_port 3128
coredump_dir /var/cache/squid
cache deny all
```

AWS

For the AWS Classic Load Balancer, I configured the listener for SSL (secure TCP) and attached a certificate from AWS Certificate Manager (ACM). The listener terminates SSL and forwards the decrypted traffic as plain TCP to Squid, which runs in a container on an ECS instance on port 3128 (Squid's default). I chose a Classic Load Balancer over the Network or Application varieties because it provides transport security (SSL) without interfering with the application layer (HTTP). At the time of writing, the Network Load Balancer did not support SSL termination (only TCP), and the Application Load Balancer intercepts HTTP requests and makes new HTTP requests to the configured backend, which can make working with Squid difficult.

From the ELB listener configuration in JSON:

```json
"Listener": {
  "InstancePort": 3128,
  "SSLCertificateId": "arn:aws:acm:us-east-1:123456789123:certificate/some-cert-arn",
  "LoadBalancerPort": 443,
  "Protocol": "SSL",
  "InstanceProtocol": "TCP"
}
```
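For local testing without a load balancer, the SSL-to-TCP listener above (`"Protocol": "SSL"`, `"InstanceProtocol": "TCP"`) can be approximated with Node's `tls` and `net` modules: accept TLS on one port, decrypt, and pipe the plain bytes to Squid on 3128. This is a rough stand-in, not how the ELB is implemented; `startTlsTerminator`, the ports, and the certificate paths are assumptions for illustration:

```javascript
const fs = require("fs");
const net = require("net");
const tls = require("tls");

// Accept TLS on listenPort and forward decrypted bytes to a plain TCP backend,
// similar in spirit to an SSL listener with InstanceProtocol TCP.
function startTlsTerminator({ listenPort, backendHost, backendPort, certPath, keyPath }) {
  const server = tls.createServer(
    { cert: fs.readFileSync(certPath), key: fs.readFileSync(keyPath) },
    (clientSocket) => {
      const backend = net.connect({ host: backendHost, port: backendPort });
      // Pipe both directions; the proxy traffic (HTTP or CONNECT) passes through untouched.
      clientSocket.pipe(backend).pipe(clientSocket);
      // If either side fails, tear down the other.
      clientSocket.on("error", () => backend.destroy());
      backend.on("error", () => clientSocket.destroy());
    }
  );
  server.listen(listenPort);
  return server;
}

// Example (hypothetical paths; the client must trust this certificate):
// startTlsTerminator({ listenPort: 8443, backendHost: "127.0.0.1", backendPort: 3128,
//                      certPath: "./cert.pem", keyPath: "./key.pem" });
```

Because the terminator never parses HTTP, Squid receives exactly what the client sent, which is the property that made the Classic Load Balancer's SSL listener a good fit.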