If your JavaScript monorepo deploys node modules, you may be deploying more than you need. Here’s how my software team and I slimmed ours down.

Monorepo and Workspaces

Though the term “monorepo” technically has a more general definition, in the JavaScript ecosystem it typically refers to a git repository holding a small number of related projects, usually organized with the “workspaces” feature of a popular package manager. This post (like my current project) uses pnpm, but the same concepts apply to other package managers (yarn, npm).

Here’s an example workspace structure, holding a shared library and two apps:

├── package.json
├── pnpm-lock.yaml
├── shared-lib
│   └── package.json
├── cloud-app
│   └── package.json
└── client-app
    └── package.json

Note that the projects depend on each other: both client-app and cloud-app reference shared-lib.
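For concreteness, here is a minimal sketch that scaffolds this layout in an empty directory. The `pnpm-workspace.yaml` file and the `workspace:*` version specifier are standard pnpm features; the `example-project` directory name and the `0.0.0` versions are assumptions made for illustration.

```shell
#!/bin/sh
# Scaffold the example workspace: one shared library and two apps.
# The directory name "example-project" is an assumption for illustration.
set -eu

root=example-project
mkdir -p "$root/shared-lib" "$root/cloud-app" "$root/client-app"

# pnpm discovers the workspace projects through this file.
cat > "$root/pnpm-workspace.yaml" <<'EOF'
packages:
  - shared-lib
  - cloud-app
  - client-app
EOF

cat > "$root/package.json" <<'EOF'
{ "name": "example-project", "private": true }
EOF

cat > "$root/shared-lib/package.json" <<'EOF'
{ "name": "shared-lib", "version": "0.0.0" }
EOF

# Both apps reference shared-lib through the workspace protocol,
# so pnpm links the local copy instead of fetching it from a registry.
for app in cloud-app client-app; do
  cat > "$root/$app/package.json" <<EOF
{
  "name": "$app",
  "version": "0.0.0",
  "dependencies": { "shared-lib": "workspace:*" }
}
EOF
done
```

Running `pnpm install` inside `example-project` afterwards should generate the `pnpm-lock.yaml` shown in the tree.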

Shared node_modules

Each project’s package.json file expresses its own set of third-party dependencies. For efficiency, package managers prefer to produce a single shared lock file and a single shared node_modules directory at the top level. Typically, running a command such as pnpm install pulls down the dependencies for every project in the workspace. This is usually what you want as a developer.

For distribution, though, this behavior is likely not what you want. Say you’re publishing client-app in the example above: there’s no need to bring along the third-party dependencies of cloud-app.

Side note: bundling. This post is about optimizing the node_modules you’re publishing. If your distributed artifacts don’t contain any, good for you! You don’t need this.

Pruning Projects

So, given a multi-workspace pnpm project, and an intent to build and publish a single project, how can we narrow this down? I’ve often wanted a command like package-manager install --subtree-beginning-with client-app, that would pull down dependencies for client-app and shared-lib, but exclude cloud-app. I’m sure this will exist someday, but as far as I know, it doesn’t yet.

Thankfully, Turbo has filled this need with a prune command:

> pnpm turbo prune client-app
Generating pruned monorepo for client-app in /Users/jrr/example-project/out
 - Added client-app
 - Added shared-lib

This produces a reduced copy of your repo, excluding unneeded projects. It even filters down the lockfile!
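If the pruned output is headed into a Docker build, Turbo's `--docker` flag splits it into separate directories. The wrapper below is a rough sketch that runs the prune and reports which of the expected outputs exist. The function name `prune_for_docker` is hypothetical, and it assumes pnpm and turbo are installed and that it runs from the workspace root.

```shell
# Hypothetical helper: prune the workspace for one project and report the result.
# Assumes pnpm and turbo are available and the current directory is the repo root.
prune_for_docker() {
  scope="$1"
  pnpm turbo prune "$scope" --docker || return 1

  # With --docker, Turbo is expected to write:
  #   out/json            package.json files only (a small, cache-friendly layer)
  #   out/full            full sources of the projects that are needed
  #   out/pnpm-lock.yaml  lockfile filtered down to those projects
  for path in out/json out/full out/pnpm-lock.yaml; do
    if [ -e "$path" ]; then
      echo "ok       $path"
    else
      echo "missing  $path"
    fi
  done
}

# Usage: prune_for_docker client-app
```

Keeping the package.json files apart from the sources matters for caching: dependency installation can then be cached on a layer that only changes when a manifest or the lockfile changes.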

Pruning devDependencies

A second kind of pruning has been around longer: separating development dependencies from production dependencies. I’ve seen many projects that never bothered with this distinction, but if you’re at all sensitive to the size of your deployed node_modules, you should look into it. In short, package managers can be asked to skip installing devDependencies to avoid deploying development tools like compilers, test frameworks, etc.
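To get a feel for how much the distinction is worth in your own repository, you can compare the size of node_modules with and without devDependencies. This is a minimal sketch: the function name `compare_install_sizes` is hypothetical, and it assumes pnpm is installed, the current directory is the workspace root, and NODE_ENV is not set to production (otherwise the second install would also skip devDependencies).

```shell
# Hypothetical helper: compare node_modules size with and without devDependencies.
# Assumes pnpm is installed and the current directory is the workspace root.
compare_install_sizes() {
  # Production dependencies only.
  pnpm install --prod >/dev/null || return 1
  prod_kb=$(du -sk node_modules | cut -f1)

  # Everything, including devDependencies.
  pnpm install >/dev/null || return 1
  all_kb=$(du -sk node_modules | cut -f1)

  echo "prod only: ${prod_kb} KB"
  echo "with dev:  ${all_kb} KB"
}

# Usage: compare_install_sizes
```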

I should warn you to be careful when you make this change in an existing project. The first time you slice off what you think are development dependencies, you may learn that some of them are, in fact, needed by the deployed app at runtime :).

Docker

On my current project, our Git repository contains two applications: one deployed to the cloud, and another running on devices. The device deployment artifacts are Docker images, with conspicuous file sizes and deploy times.

We recently applied both of the above pruning techniques to great effect, using a multistage Dockerfile based on Turbo’s guidance.

In hopes that this may be useful for your project, here’s a walk through the many stages of our Dockerfile structure:

Base

There’s not much here. This is the lowest common denominator of all the stages.

FROM node:20-bookworm-slim as base

WORKDIR /usr/src/app

RUN npm i -g [email protected]

Project Pruning

First, we copy in the project sources from disk, then run turbo prune to produce a couple of pruned subtrees of the project, and copy them into separate named stages:

FROM base as prune

RUN npm i -g [email protected]

COPY . .

RUN turbo prune --docker device-app

FROM base as pruned_project_files

COPY --from=prune /usr/src/app/out/json/ .

FROM base as pruned_sources

COPY --from=prune /usr/src/app/out/full/ .

COPY --from=prune /usr/src/app/tsconfig.json .

COPY --from=prune /usr/src/app/out/pnpm-lock.yaml .

node_modules

This is where the devDependencies vs dependencies installation happens. First, we start with the smaller set (--prod true):

FROM pruned_project_files as node_modules_for_run

RUN install_packages wget python3 make g++

RUN pnpm install --prod true

Then we derive another stage from that and install the rest (--prod false):

FROM node_modules_for_run as node_modules_for_build

RUN pnpm install --prod false --force

Build

To build the application, we take all the sources and copy in the needed node_modules.

FROM pruned_sources as build

COPY --from=node_modules_for_build /usr/src/app/node_modules /usr/src/app/node_modules

COPY --from=node_modules_for_build /usr/src/app/modules/shared/node_modules /usr/src/app/modules/shared/node_modules

COPY --from=node_modules_for_build /usr/src/app/apps/device/node_modules /usr/src/app/apps/device/node_modules

ARG example_build_input

ENV EXAMPLE_BUILD_INPUT=${example_build_input}

RUN pnpm turbo --filter device-app build

Run

The final stage copies in the smaller set of node_modules and the built artifacts.

FROM pruned_sources as run

RUN install_packages vim

ENV PORT=80

HEALTHCHECK --start-period=30s --timeout=30s --interval=30s --retries=3 \
  CMD curl --silent --fail localhost:80

COPY --from=node_modules_for_run /usr/src/app/node_modules /usr/src/app/node_modules

COPY --from=node_modules_for_run /usr/src/app/apps/device/node_modules /usr/src/app/apps/device/node_modules

COPY --from=node_modules_for_run /usr/src/app/modules/shared/node_modules /usr/src/app/modules/shared/node_modules

COPY --from=build /usr/src/app/apps/device/build /usr/src/app/apps/device/build

CMD ["pnpm", "--filter", "device-app", "start"]

So many stages

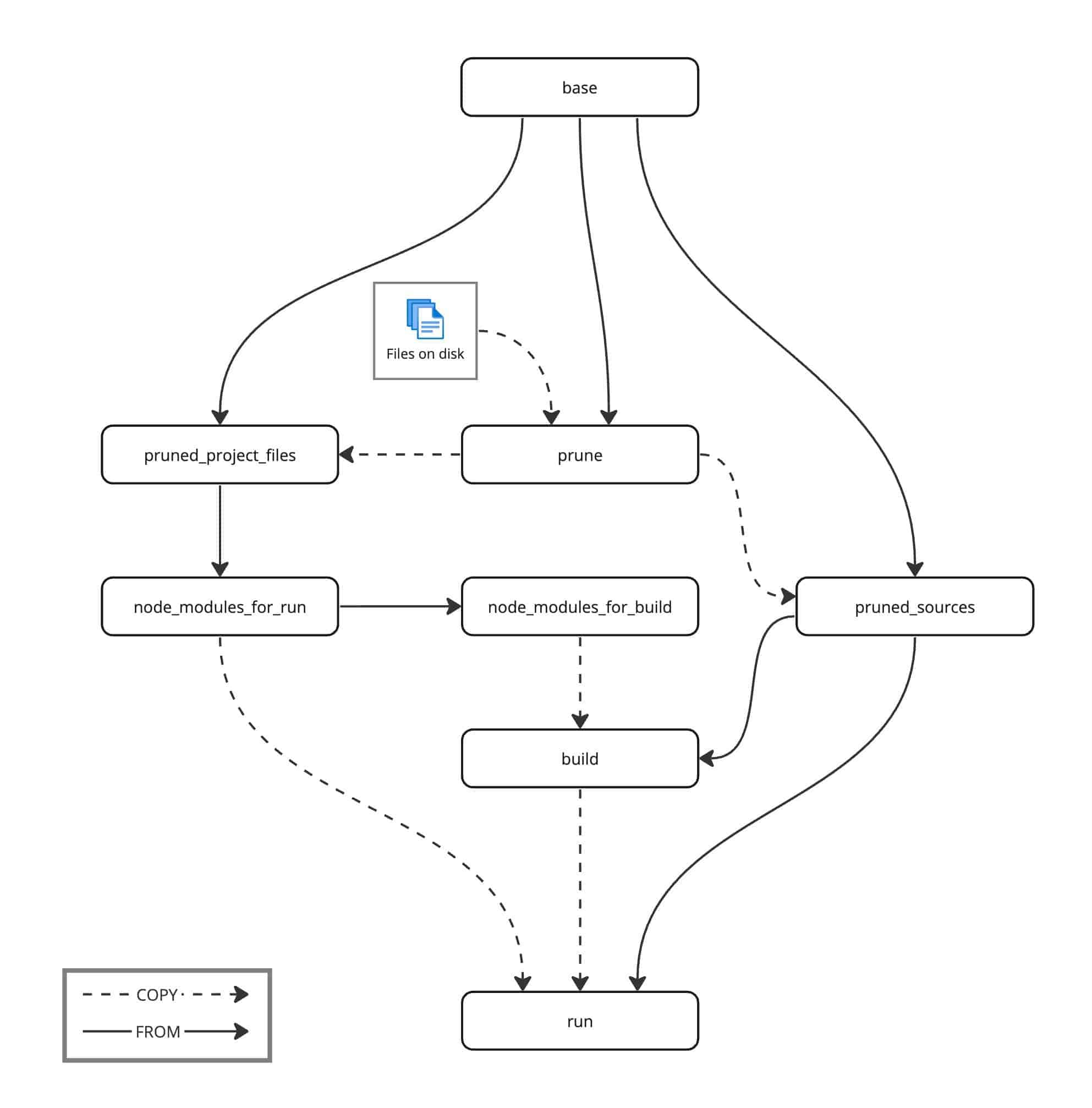

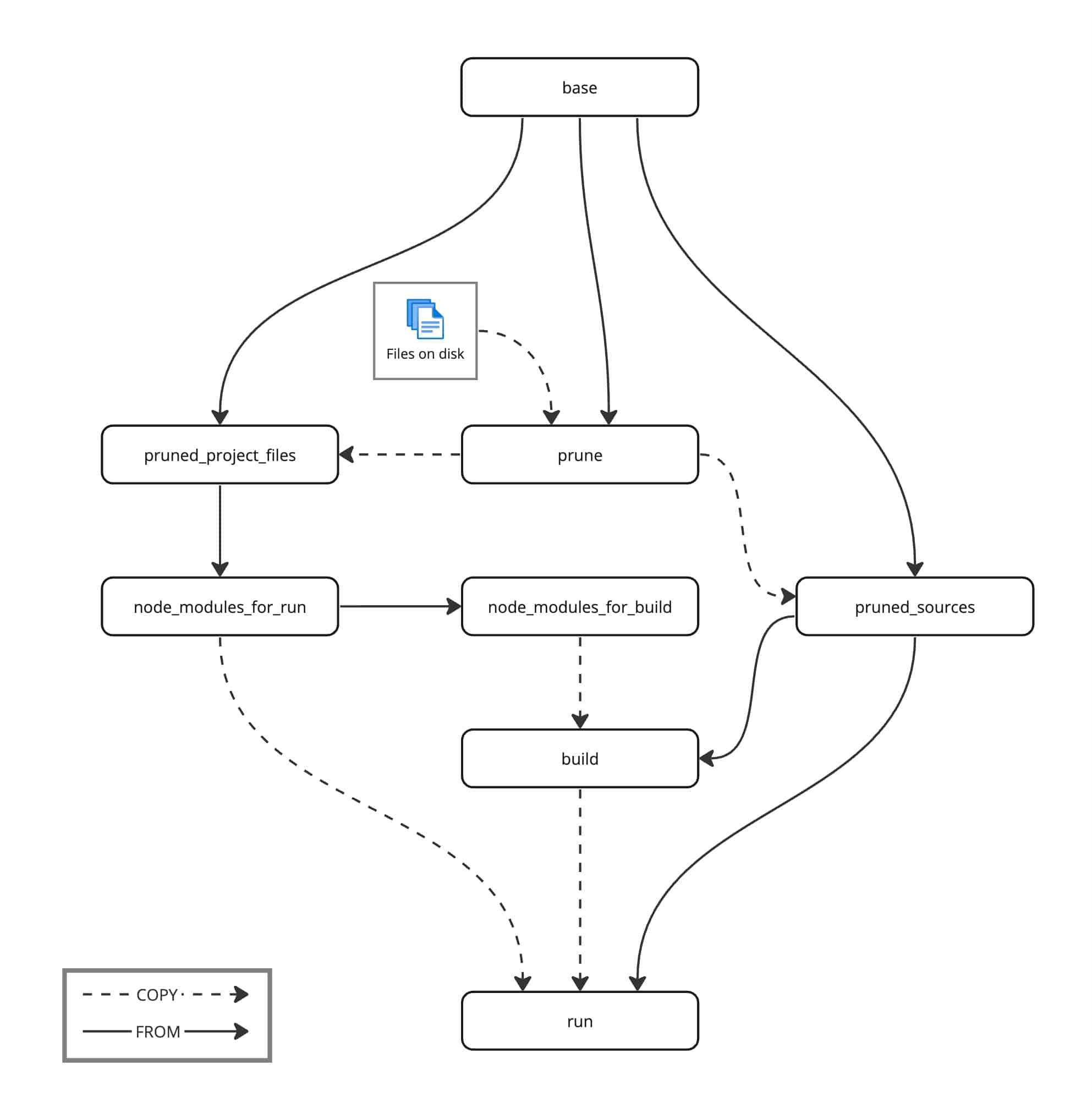

In case you lost track, here’s a diagram:

Yes, it’s a lot of stages, but they’re cheap. I value being able to name intermediate states, and they work nicely with Docker’s caching.
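One practical benefit of named stages is that each one can be built and inspected on its own with Docker's `--target` option. The helper below is a sketch under a few assumptions: the function name `build_stage`, the `device-app` image name, and the `EXAMPLE_BUILD_INPUT` shell variable used to feed the build argument are all made up for illustration.

```shell
# Hypothetical helper: build one named stage of the multistage Dockerfile.
# Assumes Docker is installed and the Dockerfile sits in the current directory.
# The "device-app" image name is an assumption for illustration.
build_stage() {
  stage="$1"
  docker build \
    --target "$stage" \
    --build-arg example_build_input="${EXAMPLE_BUILD_INPUT:-}" \
    --tag "device-app:$stage" \
    .
}

# Usage:
#   build_stage prune   # stop after turbo prune, to inspect out/
#   build_stage build   # stop after compiling
#   build_stage run     # the final, deployable image
```

Because later stages reuse the cached layers of earlier ones, building `run` after `build` should only redo the work that is specific to the final stage.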

Results

Applying these optimizations to our Docker build reduced the image size by half (!). A working example of this structure can be found on GitHub at jrr/prune-monorepo-example.
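If you want to measure the effect on your own images, `docker image inspect` reports an image's size in bytes. The sketch below compares two images and prints a rough percentage; the function name `image_size_reduction` and the tags in the usage line are hypothetical.

```shell
# Hypothetical helper: report how much smaller one image is than another.
# Assumes Docker is installed and both images exist locally.
image_size_reduction() {
  before=$(docker image inspect --format '{{.Size}}' "$1") || return 1
  after=$(docker image inspect --format '{{.Size}}' "$2") || return 1

  # .Size is in bytes; convert to whole megabytes first so the
  # percentage arithmetic stays small. Assumes images larger than 1 MB.
  before_mb=$((before / 1000000))
  after_mb=$((after / 1000000))

  echo "before: ${before_mb} MB"
  echo "after:  ${after_mb} MB"
  echo "saved:  $(( (before_mb - after_mb) * 100 / before_mb ))%"
}

# Usage: image_size_reduction device-app:before device-app:after
```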