The process of identifying text within an image by way of a computer is called optical character recognition (OCR). Loads of software applications leverage OCR engines to provide users with incredibly useful features they use daily. Depositing checks via mobile app, searching printed text (i.e. Google Books), and automating data entry are all examples of ways this technology can be used.

One engine that helps developers create these kinds of features is called Tesseract. Tesseract is a popular open-source OCR engine, originally developed by HP and later Google. Tesseract.js is a Javascript port of Tesseract that brings the powerful text recognition tool to the browser. Running the engine in the browser can allow for quicker text recognition results and reduced network traffic because the images don’t have to be sent to the server before they can be processed.

Here’s a short tutorial that demonstrates how to capture frames from a webcam and then process those frames with the text recognition engine.

1. Create a new project.

npx degit sveltejs/template my-ocr-projectNote: I’m using Svelte, but this code won’t differ drastically language by language.

2. Add Tesseract.js as a dependency.

npm install tesseract.js3. Set up the bones of the app.

Since the goal is to grab frames from a webcam, you’ll need to add code that gets the user’s media stream. This involves adding canvas and video elements to the DOM as well. There’s no need to show both the video and canvas at the same time, so you can opt to hide the video element if you’d like. You’ll also want to add a button to trigger a frame capture and an area to display the output text from the processed image.

<script lang="javascript">

let video, canvas, ctx;

let running = false;

let output = "";

const start = async () => {

try {

const stream = await navigator.mediaDevices.getUserMedia({

video: true,

});

video.srcObject = stream;

video.play();

video.onresize = () => {

canvas.width = video.videoWidth;

canvas.height = video.videoHeight;

running = true;

};

} catch (error) {

/* Do you have a webcam? */

console.log(error);

}

// TODO: Initialize Tesseract worker

};

const capture = async () => {

// TODO: Capture and process image

};

// Start the webcam

start();

</script>

<main>

<h1>OCR with Tesseract.js!</h1>

<video bind:this={video} style="display: none" />

<canvas bind:this={canvas} />

{#if running}

<button on:click={capture}>Capture</button>

<div><b>output:</b> {output}</div>

{:else}

<div>Loading...</div>

{/if}

</main>

4. Import and Init Tesseract.

Import Tesseract and create a worker that will handle processing.

import { createWorker } from "tesseract.js";

let video, canvas, ctx, worker;

let running = false;

let output = "";

const start = async () => {

...

// Initialize Tesseract worker

worker = createWorker({

logger: (m) => console.log(m),

});

await worker.load();

await worker.loadLanguage("eng");

await worker.initialize("eng");

};

5. Capture and Process.

Lastly, you’ll need to capture a frame and process the image with the Tesseract library. This can be accomplished by first drawing the image to the canvas, then calling recognize with the canvas’ data URL.

const capture = async () => {

ctx = canvas.getContext("2d");

ctx.drawImage(video, 0, 0, canvas.width, canvas.height);

const img = canvas.toDataURL("image/png");

const {

data: { text },

} = await worker.recognize(img);

output = text;

};

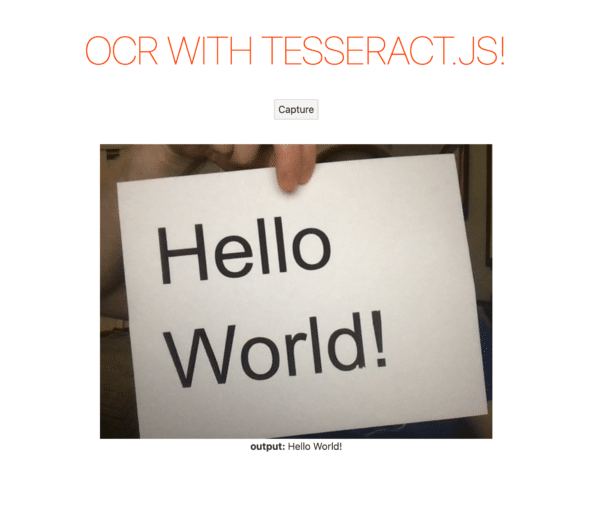

Finally, run the application with npm run dev. Once you allow the site to use your camera, you can click the “capture” button to start capturing and processing images. I recommend holding up different books or pieces of paper to test the performance.