
Artificial intelligence and machine learning: I can think of no other tech topic more talked about over the last year. Though ChatGPT and related systems have made a tremendous splash with the public, generative AI and machine learning are not new; both fields have been studied for decades. And within the last 10 years, service providers have built out the tooling and infrastructure to support artificial intelligence and machine learning systems.

In this post, I’ll briefly survey three platforms that already offer tooling for this technology: Azure Machine Learning, Databricks Machine Learning, and Amazon Bedrock.

Disclaimers:

- In my reading, I’ve found the terms “machine learning” and “artificial intelligence” used interchangeably, so I treat them as synonyms in this post as well.

- Below I note features and benefits of each offering, but I’m not advocating for any of them in particular. I leave it to the reader to decide what is best in their own context.

AI cloud provider survey

Microsoft Azure Machine Learning

I find that Microsoft’s Azure Machine Learning marketing site is quite good at describing their offering:

- prepare your data,

- train a model,

- wire it into your application, and

- manage and monitor it.

Reading through the table of contents in the Azure ML documentation paints a similar picture. The documentation also includes good tutorials and samples for each of these capabilities. For example, the “Train a model” tutorial walks through the steps needed, both in the GUI and in code, to train a model on Azure’s resources. Speaking of resources, Azure appears to have plenty of hardware, including large GPUs, to get the work done.

A feature that stands out to me is a GUI designer for creating a training workflow. In Microsoft’s example, the user builds a flow in the GUI editor that prepares data, splits it into training and test datasets, trains a model, and evaluates it. It looks snazzy, though my experience with these GUI workflow editors is that they work until they don’t.

If it has the features you need, great. But as soon as you need something the GUI doesn’t offer, you may run into trouble. What isn’t clear to me is whether there is an “escape hatch”: if a feature isn’t available in the GUI, is there a text-based editor I can use to fine-tune (pun intended) the workflow? In short, your mileage may vary with this feature.
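To make the “escape hatch” question concrete: the designer flow described above (prepare data, split it into training and test sets, train, evaluate) boils down to a handful of ordinary functions. The sketch below is plain Python with no Azure dependency and is not Azure ML’s API; the toy dataset, the least-squares line fit, and the mean-absolute-error metric are assumptions chosen only to keep it short.

```python
import random
from statistics import mean

# Hypothetical, dependency-free stand-in for a designer pipeline.
# This is NOT Azure ML's API; each function mirrors one box on the canvas.


def prepare(rows):
    """Prepare data: drop rows with a missing feature or label."""
    return [(x, y) for x, y in rows if x is not None and y is not None]


def split(rows, test_fraction=0.25, seed=0):
    """Shuffle deterministically, then split into training and test sets."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * (1 - test_fraction))
    return shuffled[:cut], shuffled[cut:]


def train(rows):
    """Fit y = slope * x + intercept by ordinary least squares."""
    x_bar = mean(x for x, _ in rows)
    y_bar = mean(y for _, y in rows)
    numerator = sum((x - x_bar) * (y - y_bar) for x, y in rows)
    denominator = sum((x - x_bar) ** 2 for x, _ in rows)
    slope = numerator / denominator
    return slope, y_bar - slope * x_bar


def evaluate(model, rows):
    """Score the model by mean absolute error on held-out rows."""
    slope, intercept = model
    return mean(abs(slope * x + intercept - y) for x, y in rows)


if __name__ == "__main__":
    # Toy data on the line y = 2x + 1, plus two incomplete rows to clean up.
    raw = [(x, 2 * x + 1) for x in range(20)] + [(None, 5), (3, None)]
    train_rows, test_rows = split(prepare(raw))
    model = train(train_rows)
    print(f"slope={model[0]:.2f}, intercept={model[1]:.2f}, "
          f"test MAE={evaluate(model, test_rows):.3f}")
```

Because each step is just a function, swapping in a different model or metric is a one-line change, which is the kind of flexibility that can be harder to reach in a GUI designer.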

Additionally, it’s unclear to me whether Microsoft provides any base models to use or fine-tune. It may be possible to bring an existing model to Azure, but this capability isn’t obvious to me.

Overall, Microsoft’s offering appears fairly complete. For customers already doing business on Azure, this looks like a great solution.

Databricks Machine Learning

Databricks also has a machine learning offering. Similar to Azure, Databricks’s system helps you prepare data, train new models with it, and then deploy those models for use.

An important distinction between Databricks and Azure, AWS, or Google Cloud is that Databricks runs on top of any of these services. When browsing Databricks’s documentation, note the dropdown in the top right corner of the window that lets you select which cloud service you’re using. Changing it updates the documentation to be fine-tuned (another pun intended) for your particular cloud service.

A helpful analogy I’ve heard is that Azure, AWS, and Google Cloud are each like a Swiss Army knife with all the tools opened and poking out in every direction. If you know how to use it carefully, you can do just about anything, as long as you don’t cut yourself. Services like Databricks smooth out this raw experience in exchange for less customizability, and they add tooling that makes common tasks easier than working with the raw cloud service.

If your team already uses Google Cloud, AWS, or Azure, Databricks is worth checking out to see how it can supplement or smooth out what your cloud service provides.

Databricks also recently released Dolly, the “world’s first truly open instruction-tuned LLM.” Databricks created an entirely new dataset of roughly 15,000 human-written instruction/response pairs and used it to fine-tune an open-source base model (EleutherAI’s Pythia), releasing both for the open-source community to use. This helps avoid the licensing and fair use challenges associated with other popular models, and Dolly is also available for commercial use. The various Dolly models and datasets are available on Databricks’s Hugging Face page.
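For a sense of what “instruction-tuned” training data looks like, here is a sketch that renders one instruction/response record as a single training string. The field names mirror those in the databricks-dolly-15k dataset (`instruction`, `context`, `response`, `category`); the sample record is made up, and the Alpaca-style prompt template is an assumption for illustration, not necessarily the exact template Databricks trained with.

```python
import json

# A made-up record using the field names found in databricks-dolly-15k.
SAMPLE_LINE = json.dumps({
    "instruction": "Summarize the passage in one sentence.",
    "context": "Dolly is an instruction-tuned language model released by Databricks.",
    "response": "Databricks released Dolly, an instruction-tuned LLM.",
    "category": "summarization",
})

# Assumed Alpaca-style template; the exact template used to train Dolly may differ.
INTRO = ("Below is an instruction that describes a task. "
         "Write a response that appropriately completes the request.")


def to_training_text(record):
    """Render one instruction/response pair as a single training string."""
    parts = [INTRO, "", "### Instruction:", record["instruction"]]
    if record.get("context"):
        # Some task categories (e.g. summarization) supply a reference passage.
        parts += ["", "### Input:", record["context"]]
    parts += ["", "### Response:", record["response"]]
    return "\n".join(parts)


if __name__ == "__main__":
    # Assuming JSON Lines input: one JSON record per line.
    record = json.loads(SAMPLE_LINE)
    print(to_training_text(record))
```

Records without a `context` simply skip the input section, so the same function handles open-ended and passage-based tasks.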

Amazon Bedrock

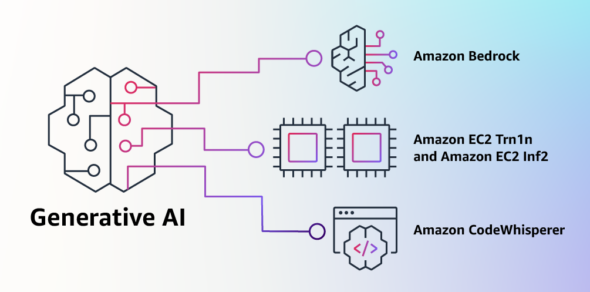

Lastly, I’ll discuss Amazon’s Bedrock suite. In their recent announcement post, Amazon describes three new systems for generative AI: Bedrock, Titan, and CodeWhisperer.

Bedrock provides straightforward access to several foundation models from organizations like Anthropic, Stability AI, and Amazon. And of course, Bedrock also includes facilities to customize these base models for your own specific purposes.

Titan is Amazon’s own family of foundation models, paired with facilities for customizing those models with one’s own fine-tuning. Since fine-tuning on large datasets is computationally expensive, many of us do not have the resources to do it on our own. With Titan, Amazon is providing the expensive resources needed for large-scale tuning.

Amazon is well-positioned to provide an extraordinary amount of computing resources to help with this task. For example, Amazon boasts that AWS can provide “… Trn1 instances in UltraClusters that can scale up to 30,000 Trainium chips (more than 6 exaflops of compute) located in the same AWS Availability Zone with petabit scale networking.” Those are some serious numbers! It will be interesting to see how Titan plays out in practice.
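To put those numbers in perspective, dividing the quoted total by the chip count gives a rough per-chip figure. This is only arithmetic on Amazon’s quoted numbers; it assumes compute is spread evenly across chips and says nothing about which numeric precision the exaflops figure refers to.

```python
# Back-of-envelope arithmetic on the quoted UltraCluster figures.
# Assumes the compute is spread evenly across chips.
CHIPS = 30_000      # "up to 30,000 Trainium chips"
TOTAL_FLOPS = 6e18  # "more than 6 exaflops": 6 x 10^18 operations per second

per_chip_tflops = TOTAL_FLOPS / CHIPS / 1e12  # convert FLOP/s to teraflops
print(f"At least ~{per_chip_tflops:.0f} teraflops per chip")
```

Since the quote says “more than” 6 exaflops, roughly 200 teraflops per chip is a lower bound.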

And finally, CodeWhisperer, which looks similar to developer tooling like GitHub Copilot. Amazon says CodeWhisperer can help with 15 programming languages across seven development environments. While this is hardly unique (see Copilot), CodeWhisperer appears to have two distinguishing features: automatic security scanning and an automatic reference tracker for open-source code. The reference tracker should help developers stay more intentional about which open-source code they use and under what licenses and terms, and ultimately decide whether it is appropriate for the project at hand.
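The internals of CodeWhisperer’s reference tracker aren’t described here, so the toy below only illustrates the general idea: fingerprint a suggested snippet after normalizing whitespace, then look it up in an index that maps known open-source code to its project and license. The index, the `example-project` name, and the exact-match strategy are all hypothetical; a real tool would need far fuzzier matching.

```python
import hashlib

# Toy illustration of reference tracking; NOT how CodeWhisperer works internally.


def fingerprint(code):
    """Hash code after collapsing whitespace and dropping blank lines."""
    lines = (" ".join(line.split()) for line in code.splitlines())
    normalized = "\n".join(line for line in lines if line)
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


# Hypothetical index: fingerprint of a known open-source snippet -> (project, license).
KNOWN_SNIPPETS = {
    fingerprint("def add(a, b):\n    return a + b"): ("example-project", "MIT"),
}


def check_suggestion(code):
    """Return (project, license) if a suggestion matches an indexed snippet, else None."""
    return KNOWN_SNIPPETS.get(fingerprint(code))


if __name__ == "__main__":
    suggestion = "def add(a,  b):\n        return a + b\n"
    match = check_suggestion(suggestion)
    if match:
        project, license_name = match
        print(f"Suggestion resembles code from {project} ({license_name} license)")
    else:
        print("No known open-source match")
```

Surfacing the license alongside the match is what lets a developer make the “is this appropriate here?” call before accepting the suggestion.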

I included the Bedrock suite last in this post because, as of May 2023, it is in a closed beta. You can get on the waitlist, but there is no stated timeline for when you’ll get access, and I haven’t received access myself yet. Nevertheless, for systems already running on AWS, this looks like the no-brainer AI tooling to start with.

Generative AI and the Future

ChatGPT’s release last year has superheated the conversation around generative AI: its possibilities, its downsides, and its risks. Several podcasts I regularly listen to, which generally have nothing to do with AI, are now abuzz about these technologies and how they affect seemingly distant fields. But all that talk doesn’t make the technology easy to use. Thankfully, Microsoft, Databricks, and Amazon are making it a bit easier to grab onto something concrete and start using this technology sooner rather than later.